Mastering Pydantic - Part 3: Types, Unions, and Serialization

Serialization, type precision, and union strategies for data handling.

In Part 1 and Part 2, we explored Pydantic's foundational concepts and advanced validation techniques. Now we'll dive into Pydantic's type system, serialization, and unions. These are essential tools for building production-ready ML/AI applications that handle diverse data sources and complex type conversions.

Modern AI applications often need to process data from multiple sources: databases with specific connection strings, APIs with various data formats, payment systems with sensitive information, and ML models that require precise type conversions. Pydantic's advanced type system provides specialized types for these scenarios while maintaining the same validation guarantees you've come to expect.

This final part covers the advanced features that make Pydantic particularly powerful for production systems: type conversions, serialization/deserialization, union types for handling multiple formats, specialized network types, and Pydantic's extensive library of domain-specific types.

With that context in mind, let’s start by looking at Pydantic’s custom types, which cover many common scenarios in application and ML/AI development.

Pydantic Custom Types

Beyond the standard types like integers, floats, lists, or strings, Pydantic includes custom types for common use cases in application development, many of which are particularly useful in ML/AI contexts. I’ll cover a few of them here—the list is extensive—but you can explore the complete set in the official docs.

Secrets: Safe Handling of Sensitive Data

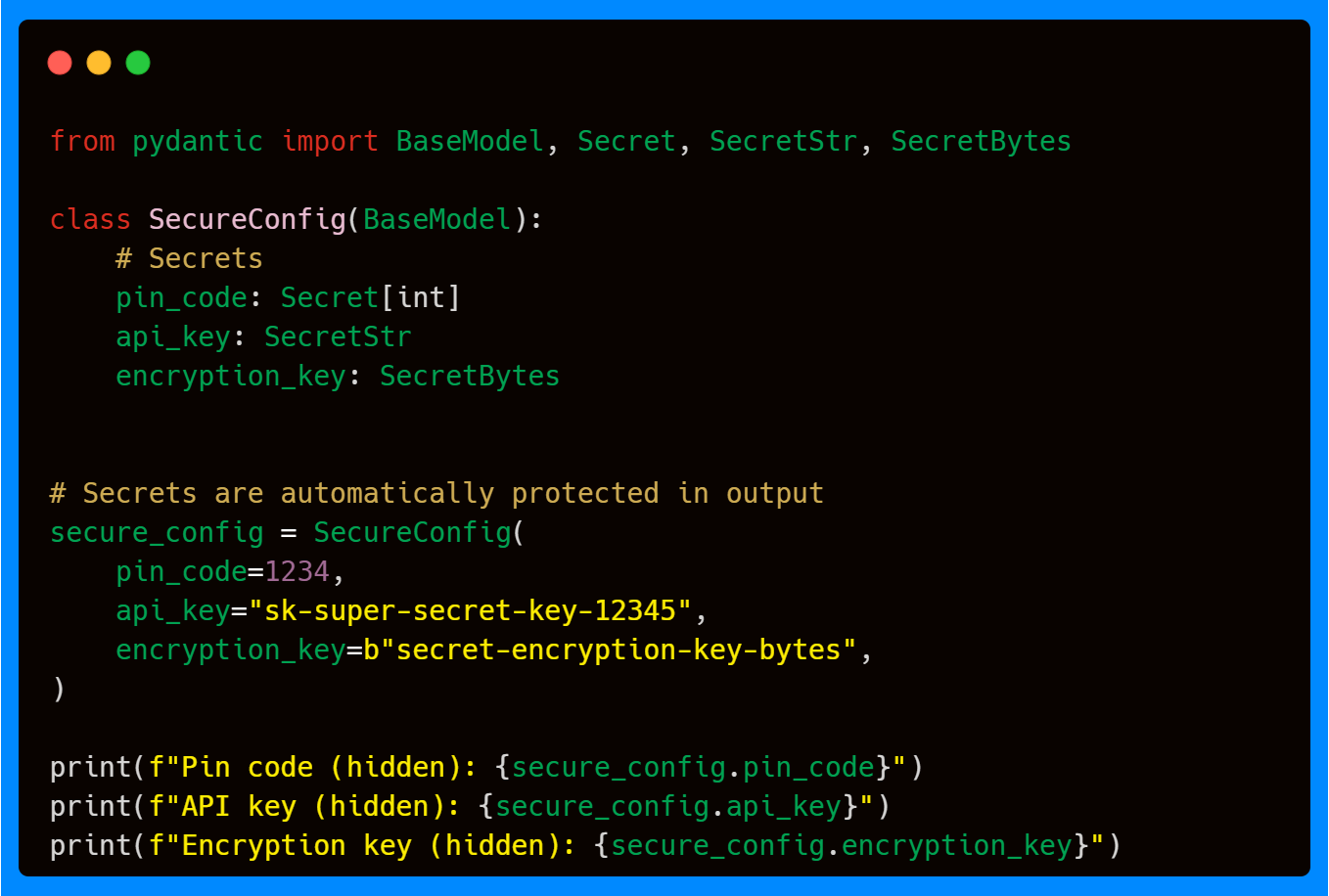

When dealing with API keys, database passwords, or PINs, one of the biggest risks is accidentally leaking them in logs or error traces. Pydantic provides three secret types:

Secret, SecretStr, and SecretBytes

These behave like their underlying types, but mask their values when printed or logged.

The raw value can still be accessed via .get_secret_value(), but this separation ensures secrets aren’t leaked by accident. This is invaluable when running ML pipelines in production, where logs are often centralized and monitored.
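Here is a minimal sketch of this behavior, assuming Pydantic v2. The model name APIConfig and the key value are hypothetical. Printing the model, or dumping it to JSON, shows only the mask; the real value requires an explicit call.

```python
from pydantic import BaseModel, SecretStr


class APIConfig(BaseModel):
    provider: str
    api_key: SecretStr  # masked in repr, str(), and JSON output


config = APIConfig(provider="openai", api_key="sk-not-a-real-key")

print(config)                    # provider='openai' api_key=SecretStr('**********')
print(config.model_dump_json())  # {"provider":"openai","api_key":"**********"}

# The raw value is only returned by an explicit call
raw_key = config.api_key.get_secret_value()
```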

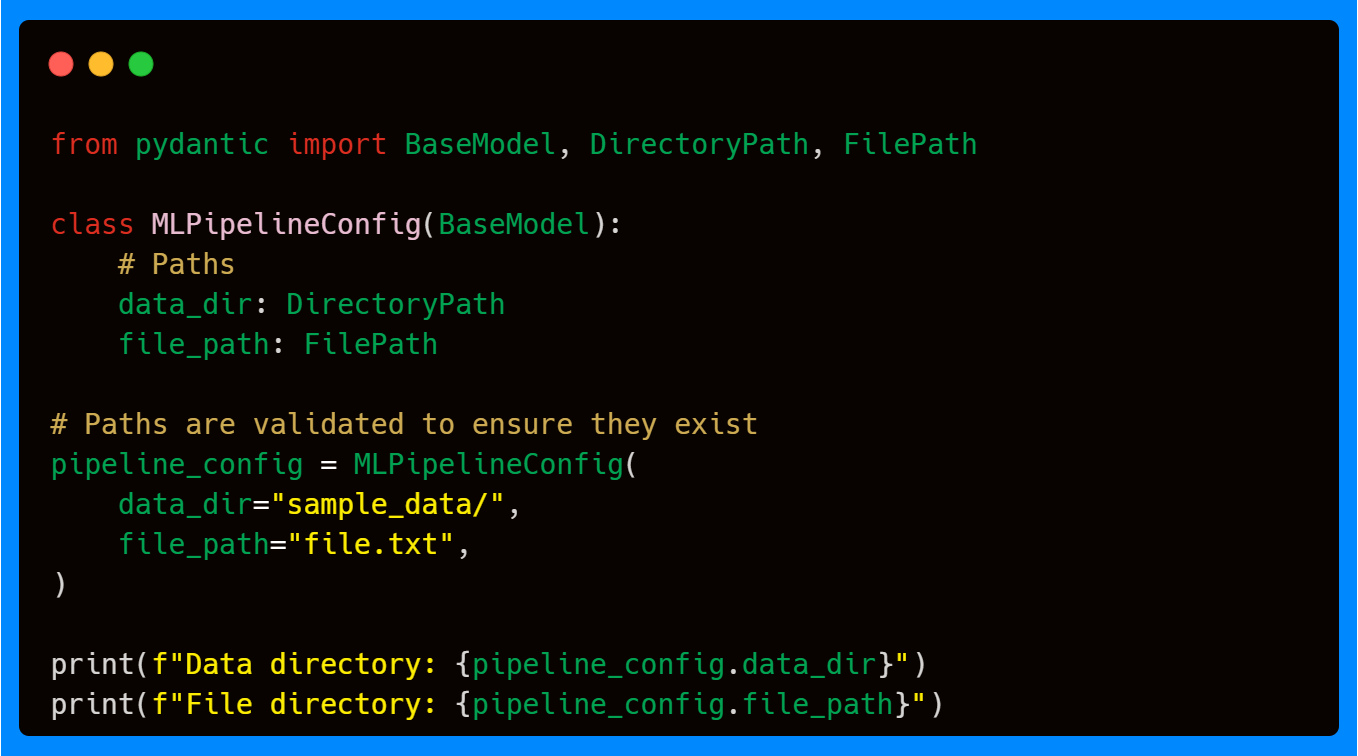

FilePath and DirectoryPath: Validating File System Inputs

Paths are everywhere in data science: datasets, checkpoints, model weights. Using FilePath and DirectoryPath enforces that these paths actually exist.

This ensures you don’t waste hours debugging because your model is pointing to a missing file or a mistyped directory.
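The sketch below assumes Pydantic v2; the TrainingConfig model and the file names are hypothetical. It creates a temporary directory and a checkpoint file, so validation succeeds for real paths and fails for a missing one.

```python
import tempfile
from pathlib import Path

from pydantic import BaseModel, DirectoryPath, FilePath, ValidationError


class TrainingConfig(BaseModel):
    data_dir: DirectoryPath  # must point to an existing directory
    checkpoint: FilePath     # must point to an existing file


# Create a throwaway directory and checkpoint file for the demo
data_dir = Path(tempfile.mkdtemp())
checkpoint = data_dir / "model.ckpt"
checkpoint.write_bytes(b"fake weights")

config = TrainingConfig(data_dir=data_dir, checkpoint=checkpoint)

missing_rejected = False
try:
    TrainingConfig(data_dir=data_dir, checkpoint=data_dir / "missing.ckpt")
except ValidationError as exc:
    missing_rejected = True
    print(exc)  # reports that the path does not point to a file
```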

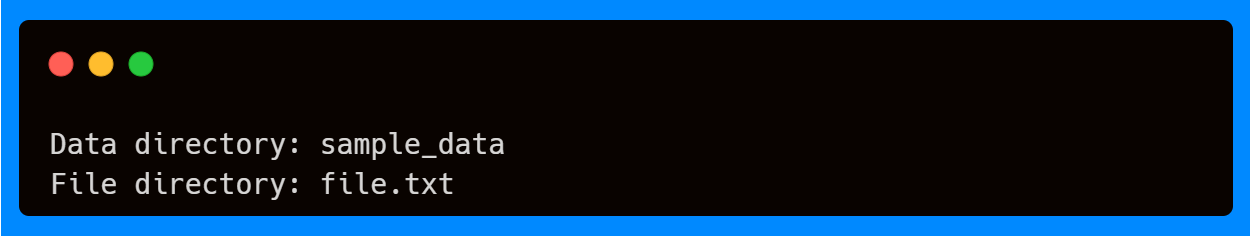

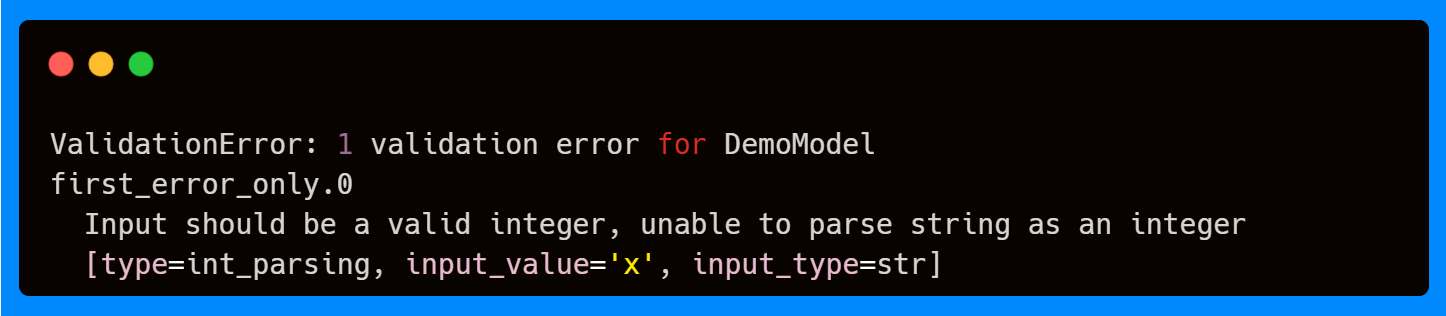

FailFast: Stop Validation Early

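By default, Pydantic collects all validation errors and reports them together. Sometimes, though, you want validation to stop at the first error for performance reasons. That's where FailFast comes in. Added in Pydantic 2.8, it annotates sequence fields (lists, tuples, sets, and similar) so that validation stops at the first invalid item.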

This is especially useful when validating large datasets where continuing after the first error adds unnecessary overhead.
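Here is a small comparison, assuming Pydantic 2.8 or later. The model names and the count_errors helper are hypothetical. The same invalid list produces three errors by default and a single error with FailFast.

```python
from typing import Annotated

from pydantic import BaseModel, FailFast, ValidationError


class CollectAll(BaseModel):
    values: list[int]  # default: every invalid item is reported


class StopEarly(BaseModel):
    values: Annotated[list[int], FailFast()]  # stop at the first invalid item


raw = [1, 2, "a", 4, "b", 6, "c"]


def count_errors(model: type[BaseModel]) -> int:
    """Hypothetical helper: number of errors raised when validating `raw`."""
    try:
        model(values=raw)
    except ValidationError as exc:
        return exc.error_count()
    return 0


print(count_errors(CollectAll))  # 3
print(count_errors(StopEarly))   # 1
```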

These custom types act like guardrails: they prevent small issues (like leaking secrets, missing files, or misconfigured paths) from becoming major runtime failures later. In production ML/AI systems, these protections are worth their weight in gold.

Beyond these custom types, the Pydantic ecosystem also offers a companion package of "extra types" designed for real-world domains such as payments, currencies, and phone numbers.

If you're enjoying this content, consider joining the AI Echoes community. Your support helps this newsletter expand and reach a wider audience.

Pydantic Extra Types

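Beyond its core library, Pydantic has a separate pydantic-extra-types package (installed with pip install pydantic-extra-types) containing domain-specific types for real-world scenarios. Some of these types depend on additional libraries, such as phonenumbers for phone numbers and pycountry for currencies. They are especially useful in applications that handle users' personal or payment information.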

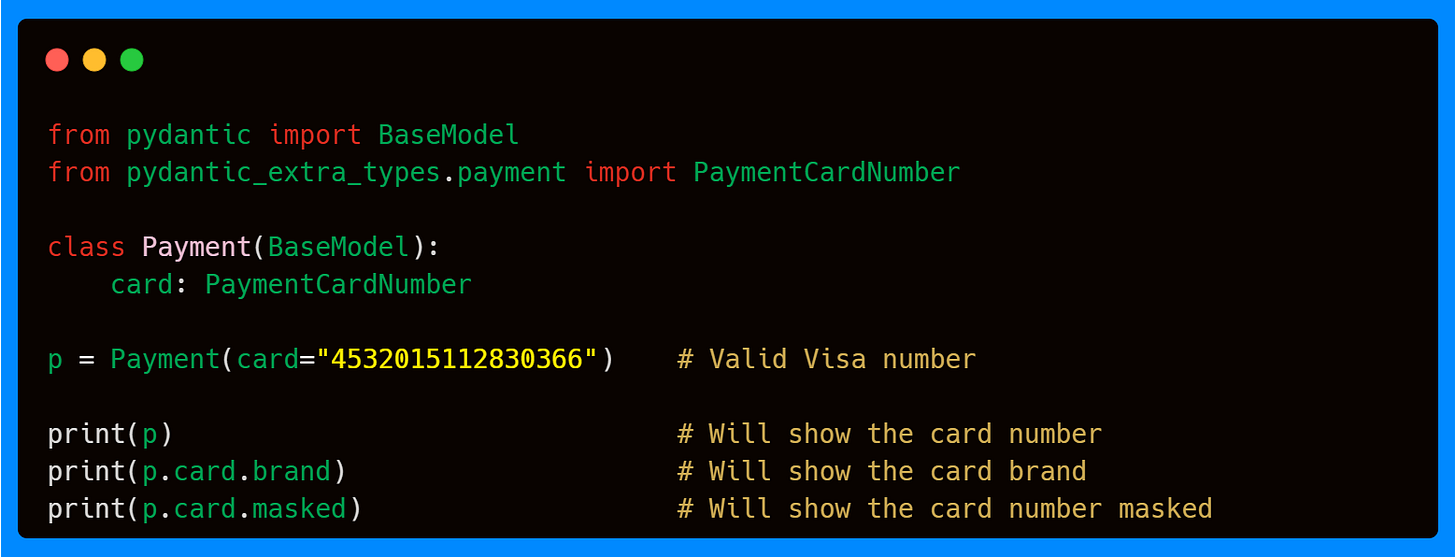

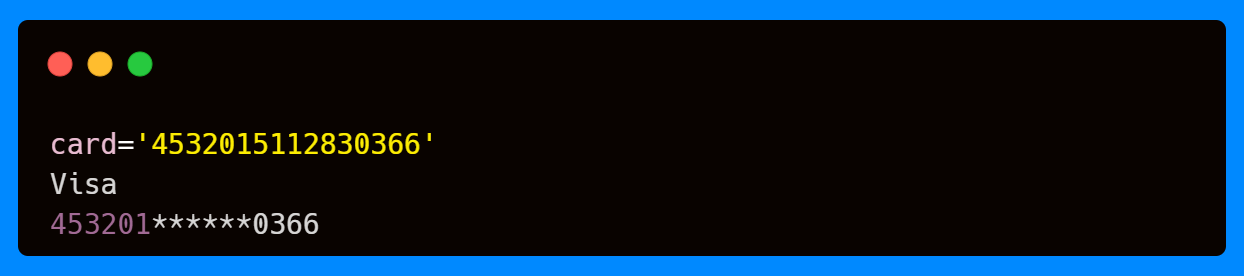

Payment

Handling payment data correctly is non-negotiable. For this, Pydantic offers the PaymentCardNumber type to validate credit card numbers.

In this example, Pydantic validates the card number. Card numbers follow a standard structure (ISO/IEC 7812) in which the leading digits identify the issuing network. For a valid Visa number, we can therefore read the brand attribute to get the network name, or the masked attribute to get a masked version of the card.

Invalid card numbers, on the other hand, are rejected instantly. Malformed or mistyped payment details are caught before they ever reach your payment gateway.

Note: Under the hood, Pydantic validates credit card numbers using the Luhn algorithm—a checksum widely used in the financial industry to detect typos or invalid numbers. This doesn’t guarantee the card is active, just that it’s structurally valid.

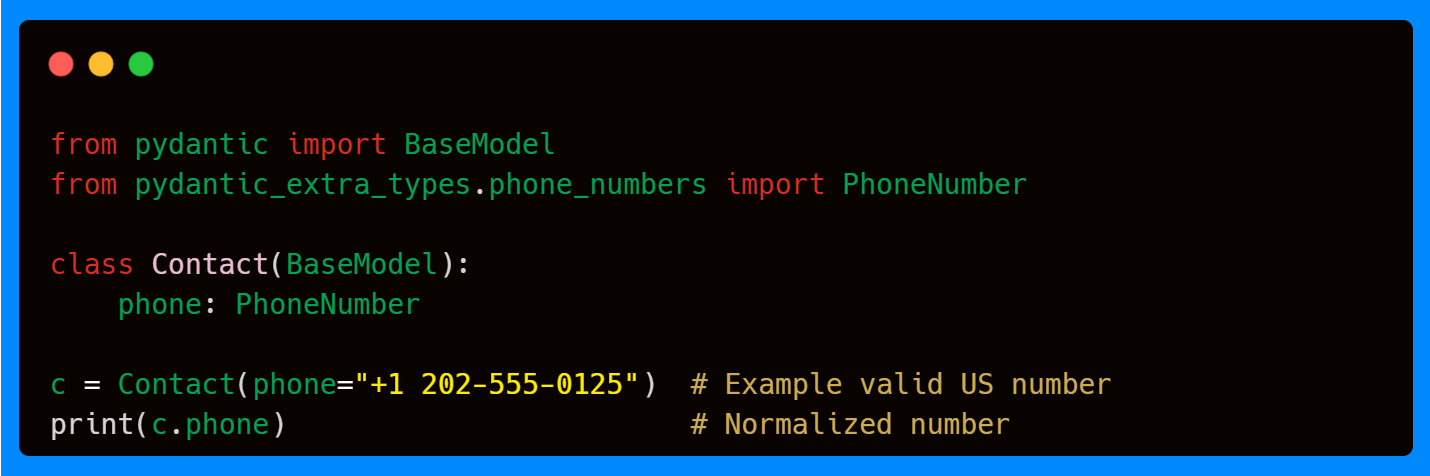

Phone Numbers

User-submitted phone numbers come in every possible format. Pydantic’s PhoneNumber type (backed by Google’s libphonenumber) automatically normalizes and validates them.

This is extremely valuable when building systems that rely on SMS authentication, contact management, or international communication.

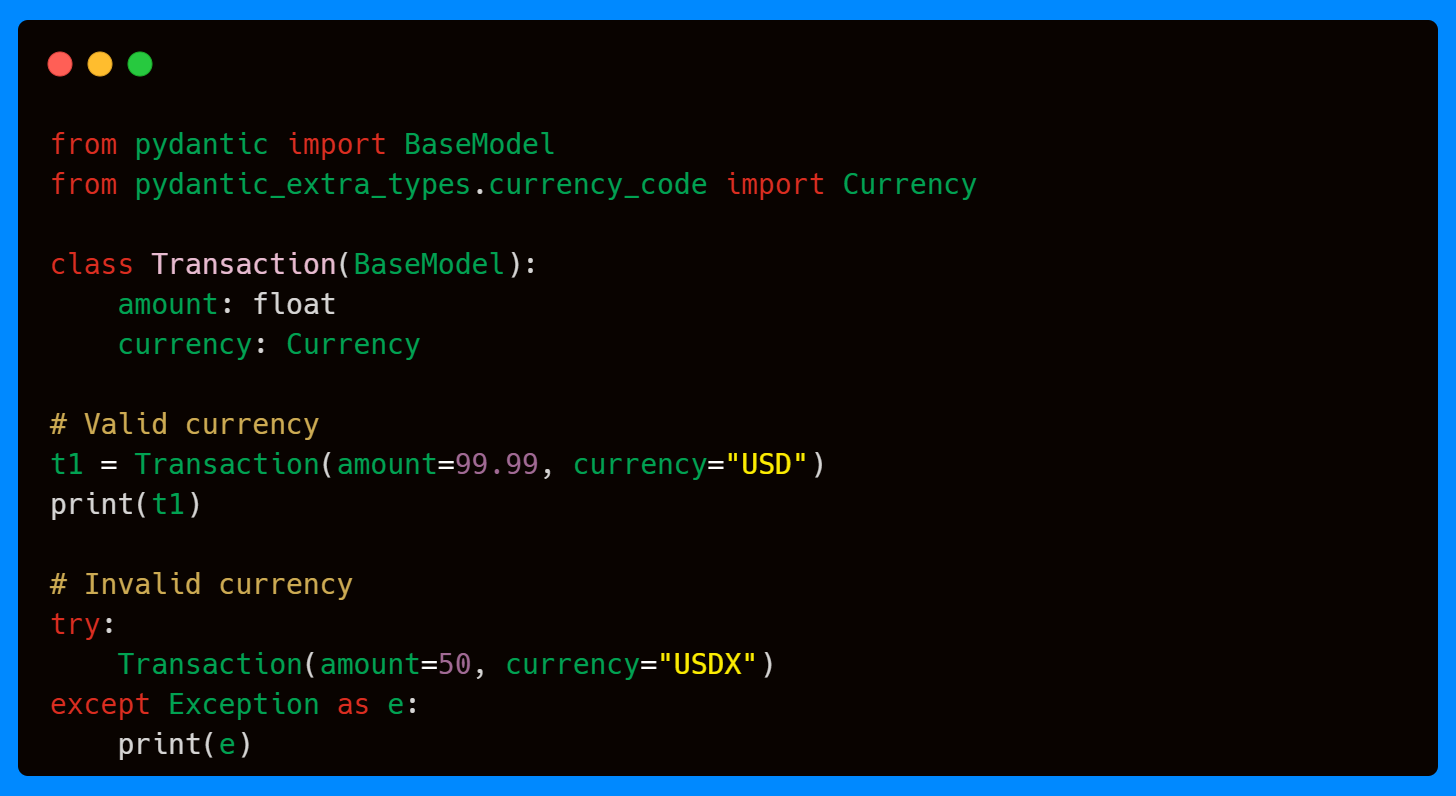

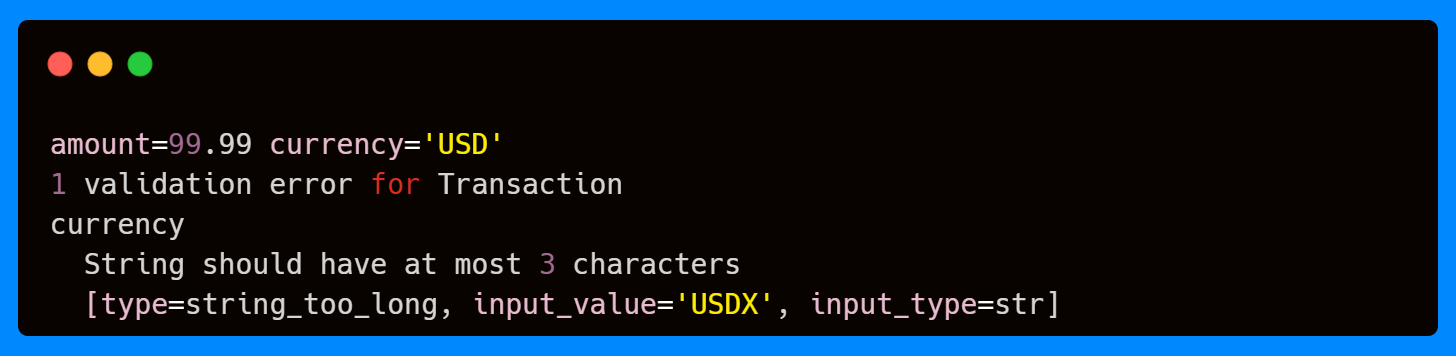

Currencies

When building financial or e-commerce systems, validating currency codes is just as important as validating amounts. Instead of relying on free-form strings, Pydantic provides currency types that follow the ISO 4217 standard, ensuring that only recognized codes (like USD, EUR, JPY) are accepted. This prevents silent errors that could arise from typos or unsupported currency values.

In this example, the model enforces that currency is a valid ISO 4217 code. "USD" is accepted, but "USDX" raises a validation error. This ensures robust validation in applications where financial correctness is non-negotiable.

Note: the Currency type covers all official codes, excluding testing codes and precious metals.

In addition to handling payments and user data, many modern systems must validate network-related inputs such as emails, database connections, and URLs. Pydantic provides specialized types for these too.

Network Types: Built-in Validation for Common Formats

Modern applications rarely live in isolation—they connect to APIs, databases, and cloud services. Pydantic comes with specialized network types that handle validation and normalization for these cases.

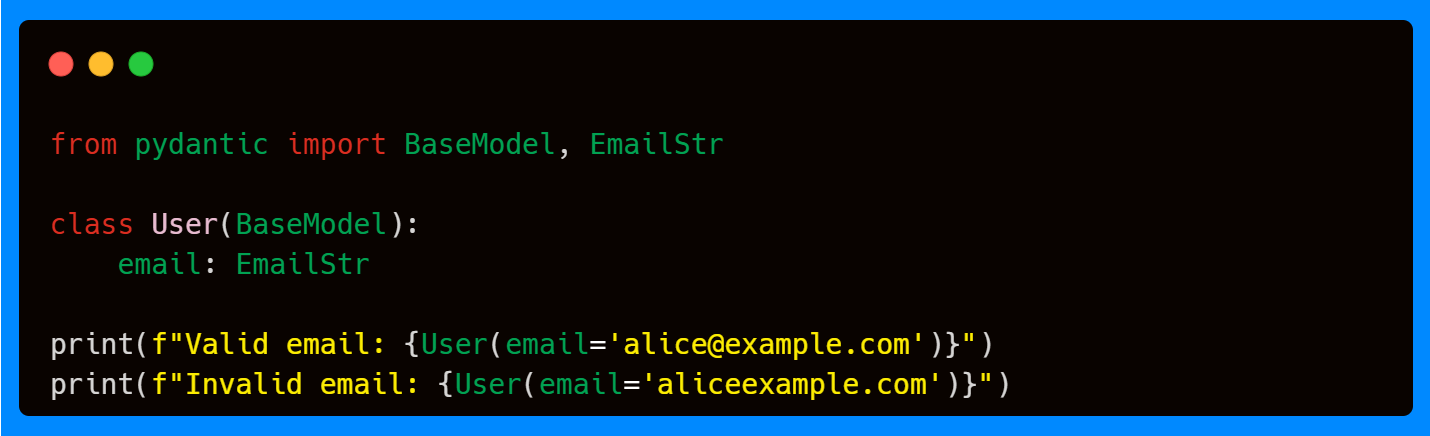

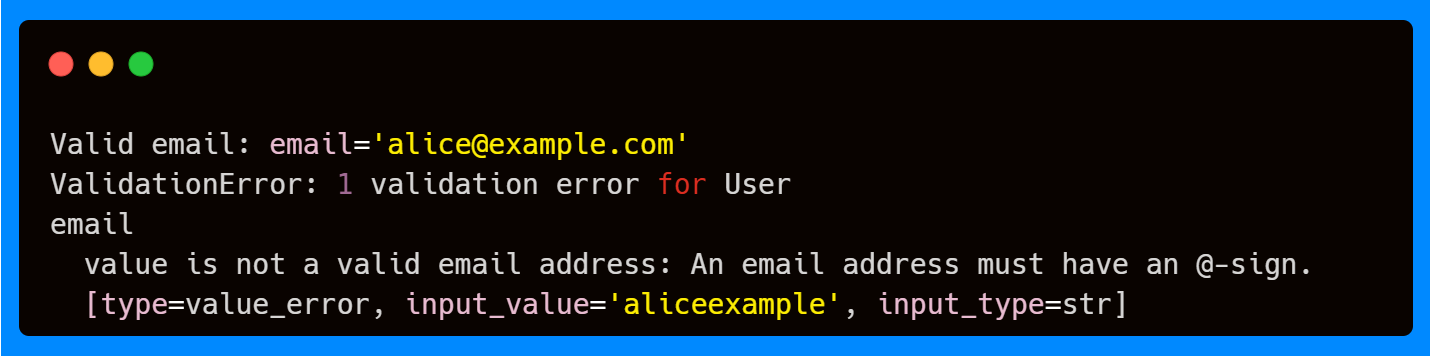

EmailStr

Email addresses are among the most common inputs an application receives. With EmailStr, Pydantic enforces that the input follows the standard email format (this type requires the optional email-validator package).

Invalid addresses like "aliceexample" raise immediate validation errors—no regex debugging required.

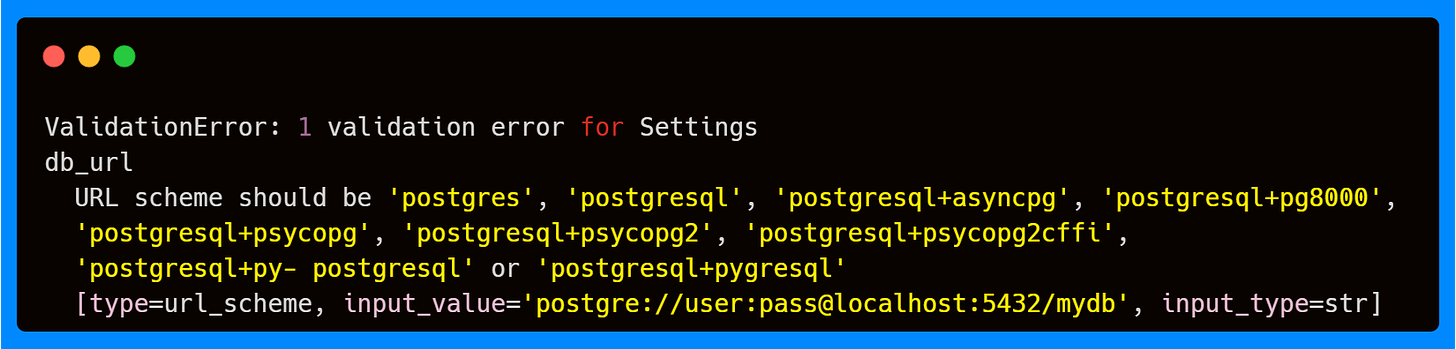

PostgresDsn

Databases often require complex connection strings. Pydantic’s PostgresDsn ensures these strings are well-formed.

Malformed DSNs are caught immediately, and Pydantic provides a detailed error message with the formats that can be accepted.
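Here is a sketch assuming Pydantic v2. The DatabaseSettings model and the credentials are hypothetical. PostgresDsn can hold several hosts, so host details are read through hosts().

```python
from pydantic import BaseModel, PostgresDsn, ValidationError


class DatabaseSettings(BaseModel):
    url: PostgresDsn


settings = DatabaseSettings(url="postgresql://ml_user:secret@localhost:5432/features")
host = settings.url.hosts()[0]  # first (and only) host entry
print(settings.url.scheme, host["host"], host["port"], settings.url.path)
# postgresql localhost 5432 /features

rejected = False
try:
    DatabaseSettings(url="mysql://ml_user:secret@localhost:3306/features")
except ValidationError as exc:
    rejected = True
    print(exc)  # the message lists the accepted Postgres schemes
```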

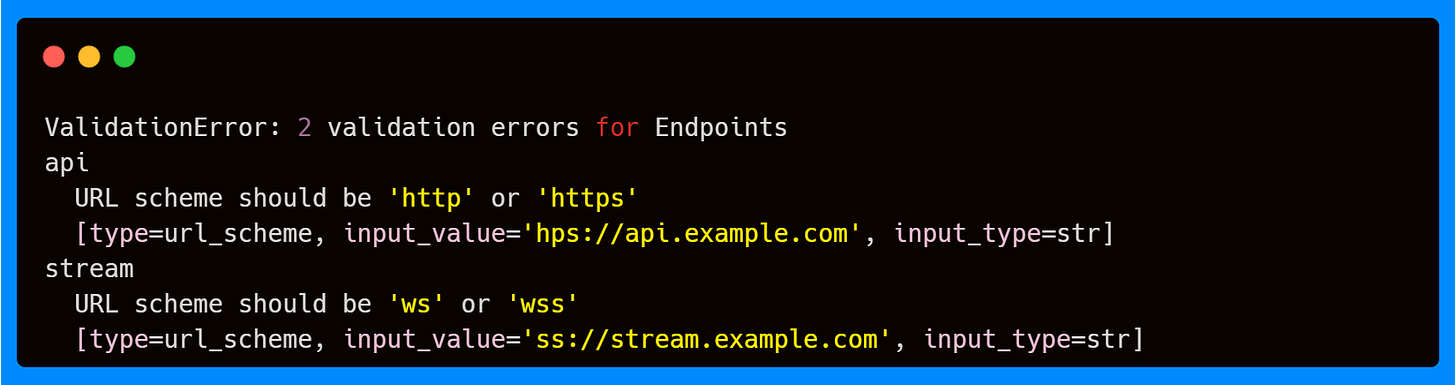

HttpUrl and WebsocketUrl

For API and streaming workloads, Pydantic validates URLs out of the box.

It enforces correct schemes (http, https, ws, wss) and valid hostnames—so your configs can’t silently drift into invalid values.
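Here is a minimal sketch, assuming a recent Pydantic v2 release that includes WebsocketUrl. The InferenceEndpoints model and the URLs are hypothetical.

```python
from pydantic import BaseModel, HttpUrl, ValidationError, WebsocketUrl


class InferenceEndpoints(BaseModel):
    rest_api: HttpUrl     # http or https only
    stream: WebsocketUrl  # ws or wss only


endpoints = InferenceEndpoints(
    rest_api="https://api.example.com/v1/predict",
    stream="wss://stream.example.com/events",
)
print(endpoints.rest_api.host, endpoints.stream.scheme)  # api.example.com wss

rejected = False
try:
    # ftp is not an allowed scheme for HttpUrl
    InferenceEndpoints(rest_api="ftp://files.example.com", stream="wss://stream.example.com")
except ValidationError:
    rejected = True
```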

✅ With Custom Types, Extra Types, and Network Types combined, Pydantic transforms validation into a domain-aware safety net for modern applications. Whether you’re handling secrets, payments, phone numbers, or DSNs, these specialized types let you focus on business logic while trusting Pydantic to catch inconsistencies.

Once types are defined, the next challenge is handling the messy reality of incoming data. This is where Pydantic’s conversion modes—lax and strict—become important.

Type Conversions: Strict vs Lax Mode

Now that we've covered Pydantic's specialized types, let's explore how the framework handles type conversion, a critical aspect when processing data from diverse sources. Pydantic's type conversion system operates in two modes, lax and strict, which give you precise control over how input data is transformed into your target types. Understanding these modes is crucial for building robust data processing pipelines that handle real-world input variations.

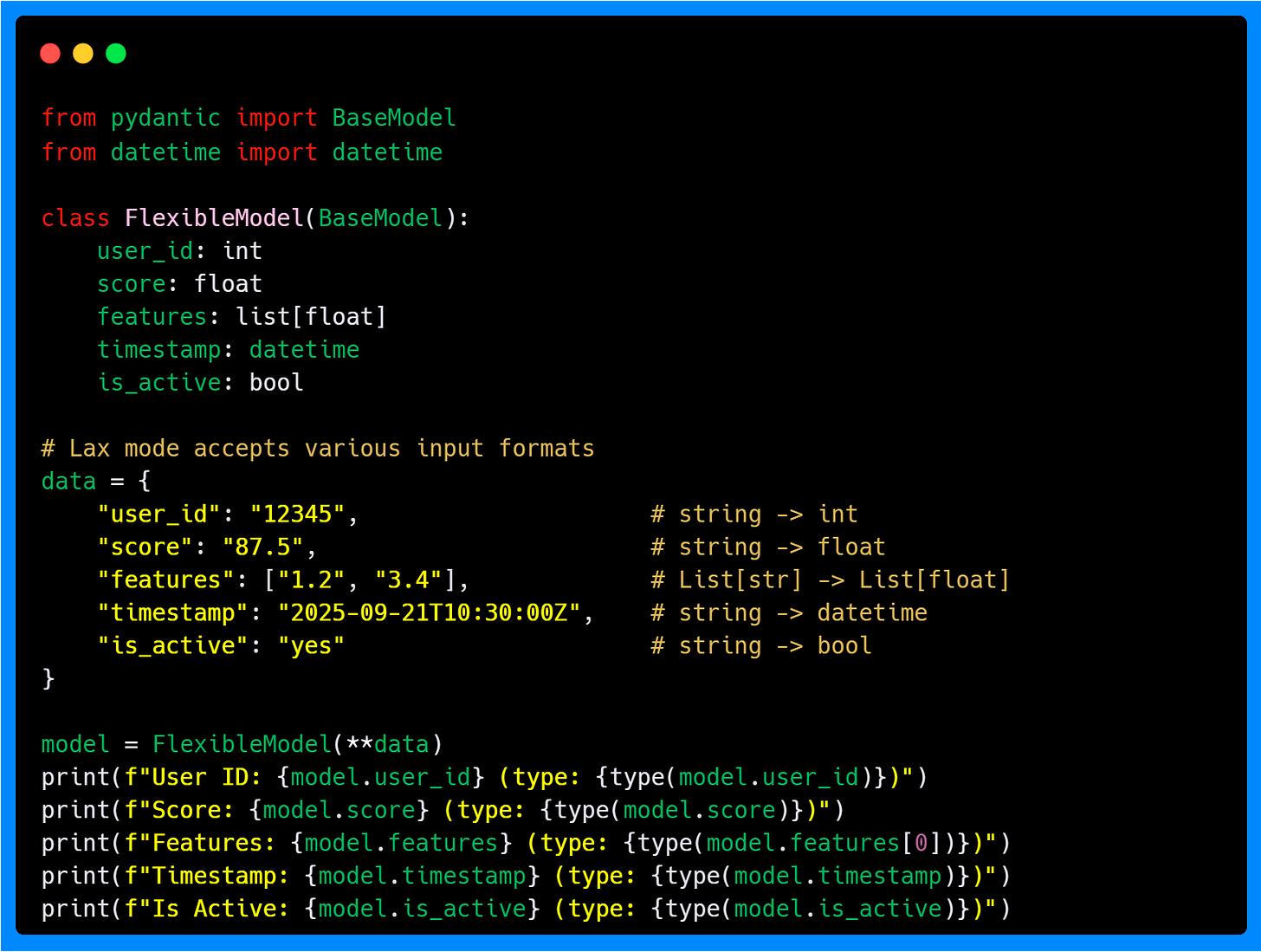

Lax Mode (Default): Flexible Type Coercion

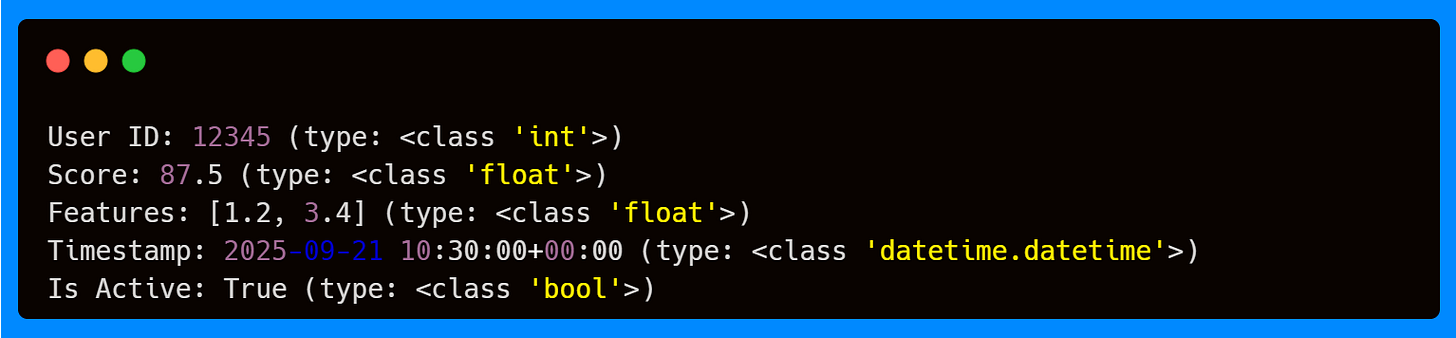

By default, Pydantic uses lax mode, which attempts reasonable type conversions to transform compatible inputs into the expected types. This flexibility is invaluable when working with data from external sources like CSVs or user inputs, where types might not perfectly match your model definitions.

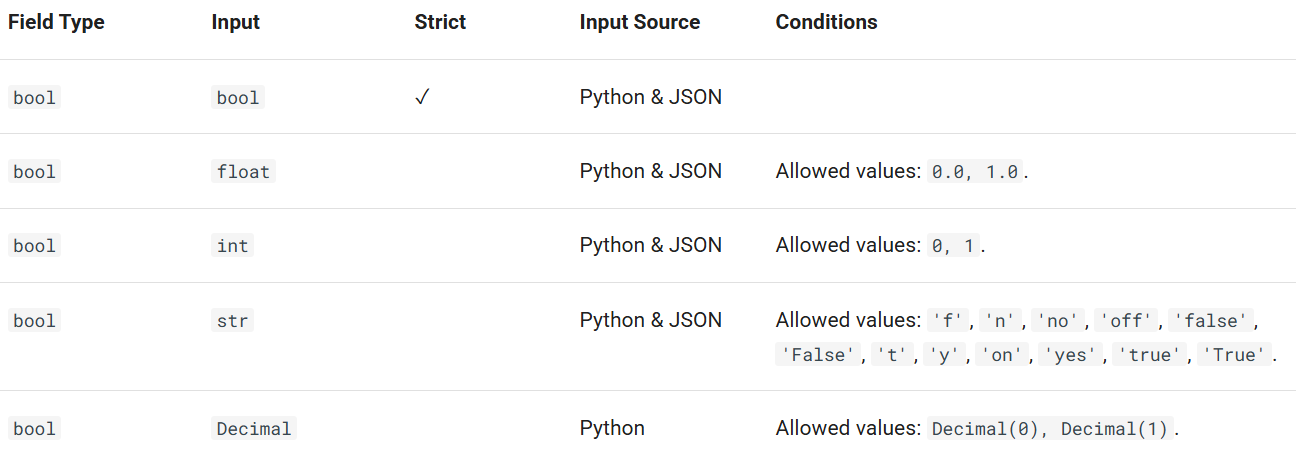

This automatic coercion handles common scenarios where data arrives as strings (as with JSON or CSV) but needs to be converted to the appropriate Python types for processing. Lax mode also covers edge cases such as converting booleans from various string representations ("true", "yes", "on", "1", and their negative counterparts). Below you can see some examples of the boolean and datetime conversions Pydantic supports. The complete conversion table is available in the official Pydantic documentation.
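Here is a minimal sketch of lax coercion, assuming Pydantic v2. The SensorReading model and the CSV-like row are hypothetical. Every value arrives as a string and is converted to the declared type.

```python
from datetime import datetime

from pydantic import BaseModel


class SensorReading(BaseModel):
    sensor_id: int
    value: float
    active: bool
    recorded_at: datetime


# All values are strings, as they would be when read from a CSV row
row = {"sensor_id": "42", "value": "3.14", "active": "yes", "recorded_at": "2024-05-01T12:30:00"}
reading = SensorReading(**row)
print(reading)
# sensor_id=42 value=3.14 active=True recorded_at=datetime.datetime(2024, 5, 1, 12, 30)
```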

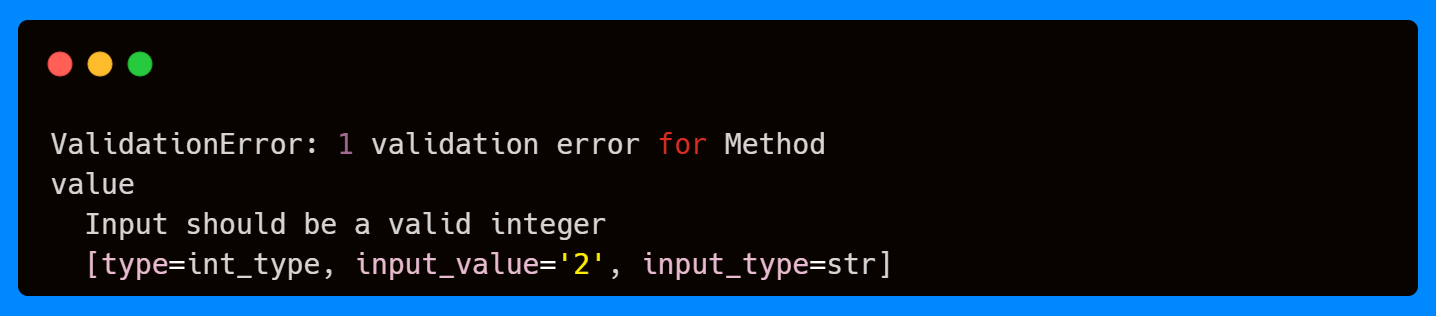

Strict Mode: Precise Type Enforcement

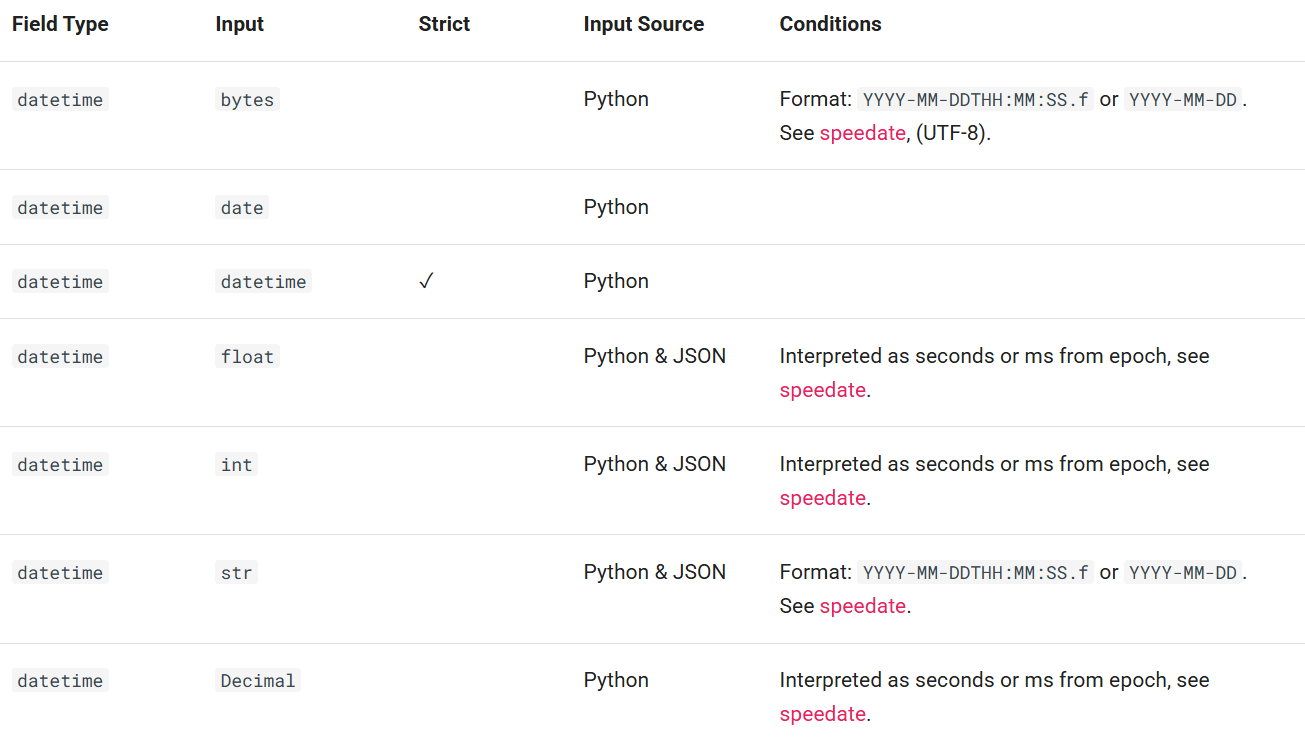

Sometimes you need strict type enforcement without any automatic conversion. This is particularly important in applications where type precision matters—for example, when working with model weights, feature vectors, or financial calculations where unexpected type conversions could introduce subtle bugs.

Strict mode can be enabled in several ways. The most common is to pass strict=True to Field, to ConfigDict, or to validation methods such as model_validate(). You can also use the built-in Strict* types, or wrap a type with Annotated together with the Strict() marker.

All methods enforce strict type checking and will raise a validation error if the input does not exactly match the expected type.

This approach is particularly valuable in ML/AI applications where you want to be flexible with user-provided configuration values but strict with model parameters that could affect training or inference quality.
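The sketch below assumes Pydantic v2 and combines these options. The TrainingJob and StrictJob models and the is_rejected helper are hypothetical. The seed field stays lax while the training parameters are strict, and strict=True at validation time makes the whole call strict.

```python
from typing import Annotated

from pydantic import BaseModel, ConfigDict, Field, Strict, StrictInt, ValidationError


class TrainingJob(BaseModel):
    seed: int                                  # lax (default): "7" is coerced to 7
    epochs: int = Field(strict=True)           # strict via Field
    batch_size: StrictInt                      # strict via a built-in Strict* type
    learning_rate: Annotated[float, Strict()]  # strict via Annotated + Strict()


class StrictJob(BaseModel):
    model_config = ConfigDict(strict=True)     # strict for every field in the model
    epochs: int


def is_rejected(model: type[BaseModel], data: dict, **kwargs) -> bool:
    """Hypothetical helper: True if validation raises a ValidationError."""
    try:
        model.model_validate(data, **kwargs)
    except ValidationError:
        return True
    return False


valid = {"seed": "7", "epochs": 10, "batch_size": 32, "learning_rate": 0.001}
job = TrainingJob.model_validate(valid)  # seed coerced, strict fields match exactly

print(is_rejected(TrainingJob, {**valid, "epochs": "10"}))  # True
print(is_rejected(StrictJob, {"epochs": "10"}))             # True
print(is_rejected(TrainingJob, valid, strict=True))         # True: "7" is no longer coerced
```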

Note: Pydantic provides the following strict types: StrictBool, StrictBytes, StrictFloat, StrictInt, and StrictStr.

After validating and converting data, the next step is often sending it back out—to logs, APIs, or storage. That’s where serialization comes in.

Serializers and Deserializers

Moving beyond type conversion, serialization becomes crucial when your models need to persist data or turn complex objects into formats that other systems can consume. Pydantic's serialization system gives you fine-grained control over how models are converted to and from different formats. This is essential for ML/AI applications that save models, log data, or exchange payloads with external services.

Note: Pydantic uses the terms "serialize" and "dump" interchangeably. Both refer to the process of converting a model to a dictionary or JSON-encoded string.

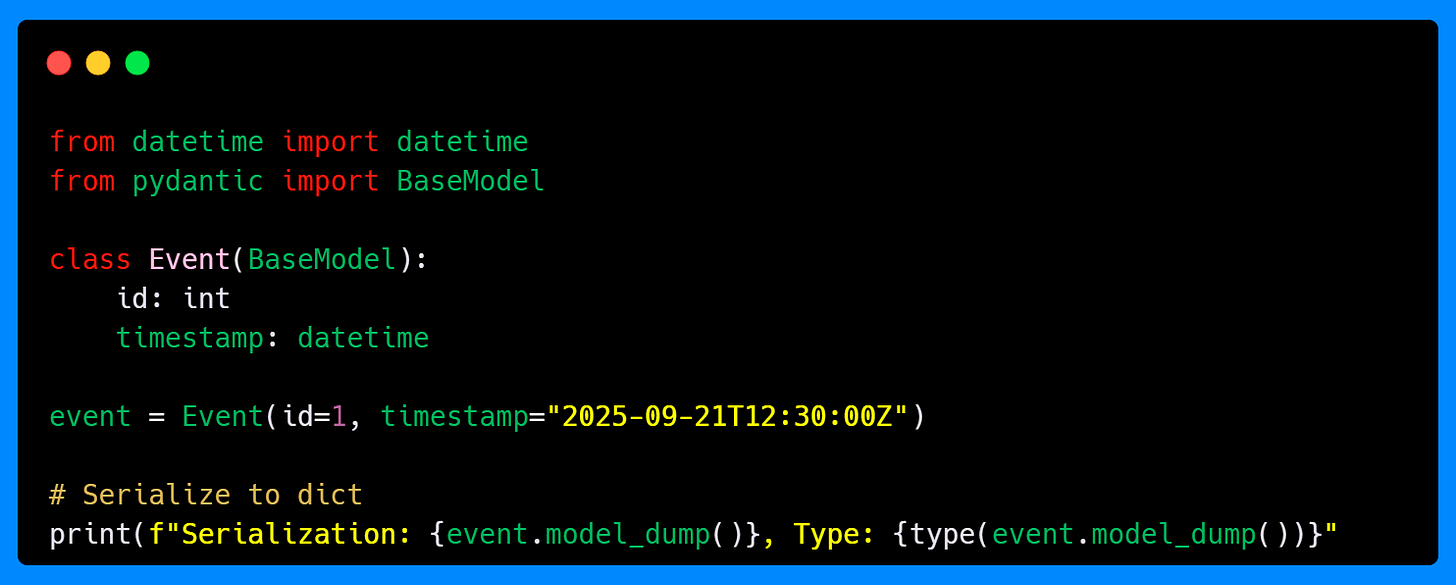

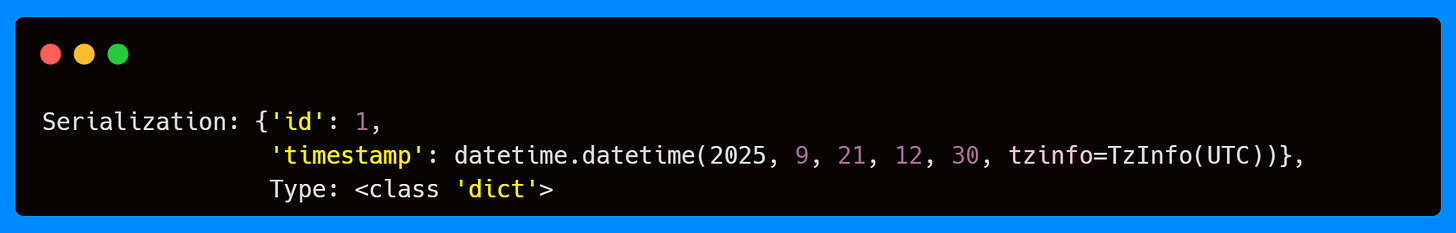

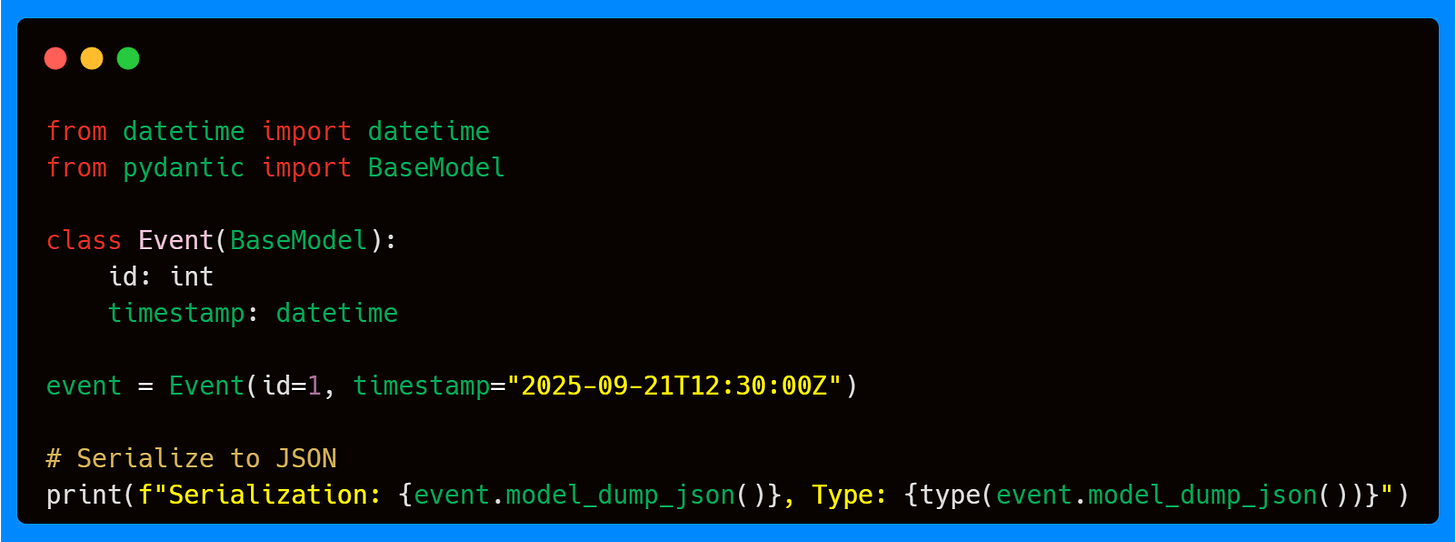

Model Dumping: The Foundation of Serialization

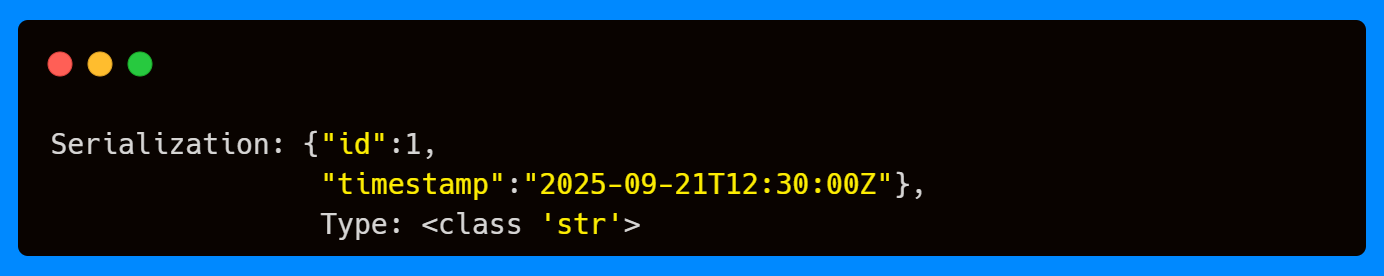

The primary way to convert a Pydantic model to a dictionary is using model_dump(). This method recursively converts sub-models to dictionaries and provides extensive control over the output format.

You can serialize a model directly to a JSON-encoded string with the method model_dump_json(). This is especially useful when sending responses from APIs, logging structured events, or persisting configurations in a lightweight format.

Together, model_dump() and model_dump_json() provide a consistent bridge between Python-native objects and external systems. Whether you’re storing experiment metadata, streaming predictions from an inference service, or passing structured payloads to a frontend, Pydantic ensures that your data can be reliably serialized.
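Here is a minimal sketch, assuming Pydantic v2. The Experiment and Metrics models are hypothetical. It shows recursive dumping of a nested model, JSON-compatible output with mode="json", output filtering with include and exclude_none, and direct JSON encoding.

```python
from datetime import datetime
from typing import Optional

from pydantic import BaseModel


class Metrics(BaseModel):
    accuracy: float
    f1: Optional[float] = None


class Experiment(BaseModel):
    name: str
    created_at: datetime
    metrics: Metrics  # nested model: dumped recursively to a dict
    tags: list[str] = []


exp = Experiment(name="baseline", created_at=datetime(2024, 5, 1, 12, 30), metrics=Metrics(accuracy=0.93))

as_dict = exp.model_dump()                   # Python objects (datetime stays a datetime)
json_ready = exp.model_dump(mode="json")     # JSON-compatible types (datetime -> ISO string)
compact = exp.model_dump(include={"name", "metrics"}, exclude_none=True)
as_json = exp.model_dump_json()              # JSON-encoded string

print(compact)  # {'name': 'baseline', 'metrics': {'accuracy': 0.93}}
```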

Custom Serializers

Beyond basic model dumping, Pydantic lets you customize serialization through serializer decorators, similar to the custom validators we saw in Part 2 of this series. This is particularly valuable when working with external systems that expect specific formats, or when you want to streamline how your models are exported.

There are two levels of customization: field serializers and model serializers. Both default to plain mode (where your function fully replaces the default serialization), but you can also use wrap mode (where you can extend the default logic rather than replace it).

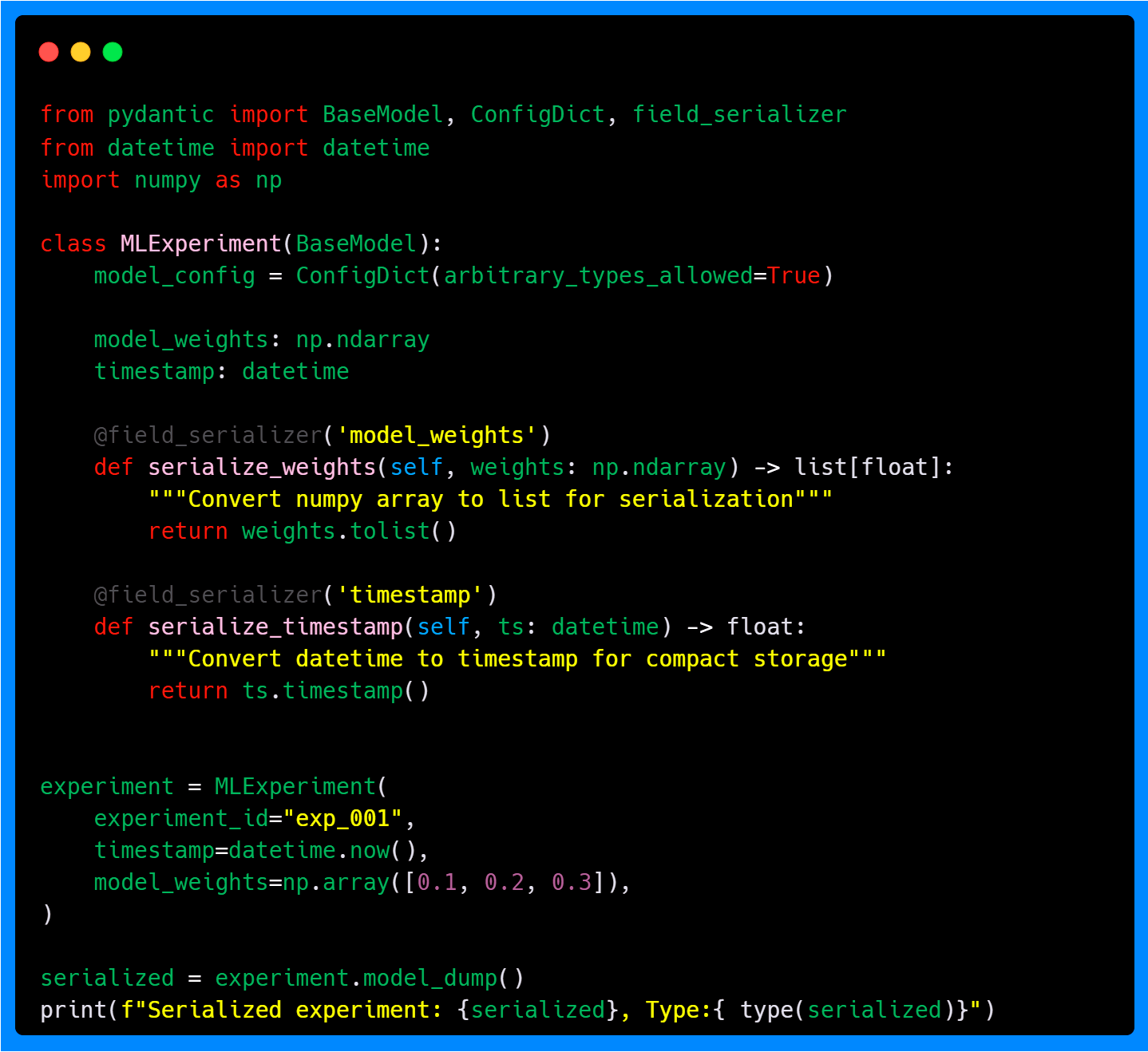

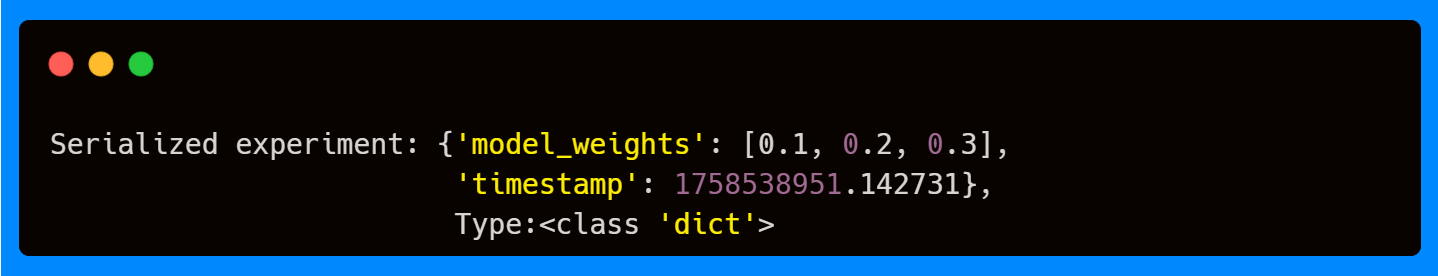

Field Serializers

Field serializers allow you to customize how individual fields are serialized, particularly useful for complex types that need special handling.

Note that we set arbitrary_types_allowed=True in ConfigDict because NumPy arrays have no built-in Pydantic support. Here, NumPy arrays are converted into Python lists and datetimes into timestamps. The serializer functions completely replace Pydantic's default serialization for those fields.
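Here is a sketch of plain field serializers in the spirit of this example, assuming Pydantic v2 and NumPy. The Prediction model and its values are hypothetical.

```python
from datetime import datetime, timezone

import numpy as np
from pydantic import BaseModel, ConfigDict, field_serializer


class Prediction(BaseModel):
    # NumPy arrays have no built-in Pydantic schema, so allow arbitrary types
    model_config = ConfigDict(arbitrary_types_allowed=True)

    embedding: np.ndarray
    created_at: datetime

    @field_serializer("embedding")
    def serialize_embedding(self, value: np.ndarray) -> list:
        return value.tolist()  # ndarray -> plain Python list

    @field_serializer("created_at")
    def serialize_created_at(self, value: datetime) -> float:
        return value.timestamp()  # datetime -> Unix timestamp


pred = Prediction(
    embedding=np.array([0.5, 0.25, 1.0]),
    created_at=datetime(2024, 1, 1, tzinfo=timezone.utc),
)
print(pred.model_dump())  # {'embedding': [0.5, 0.25, 1.0], 'created_at': 1704067200.0}
```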

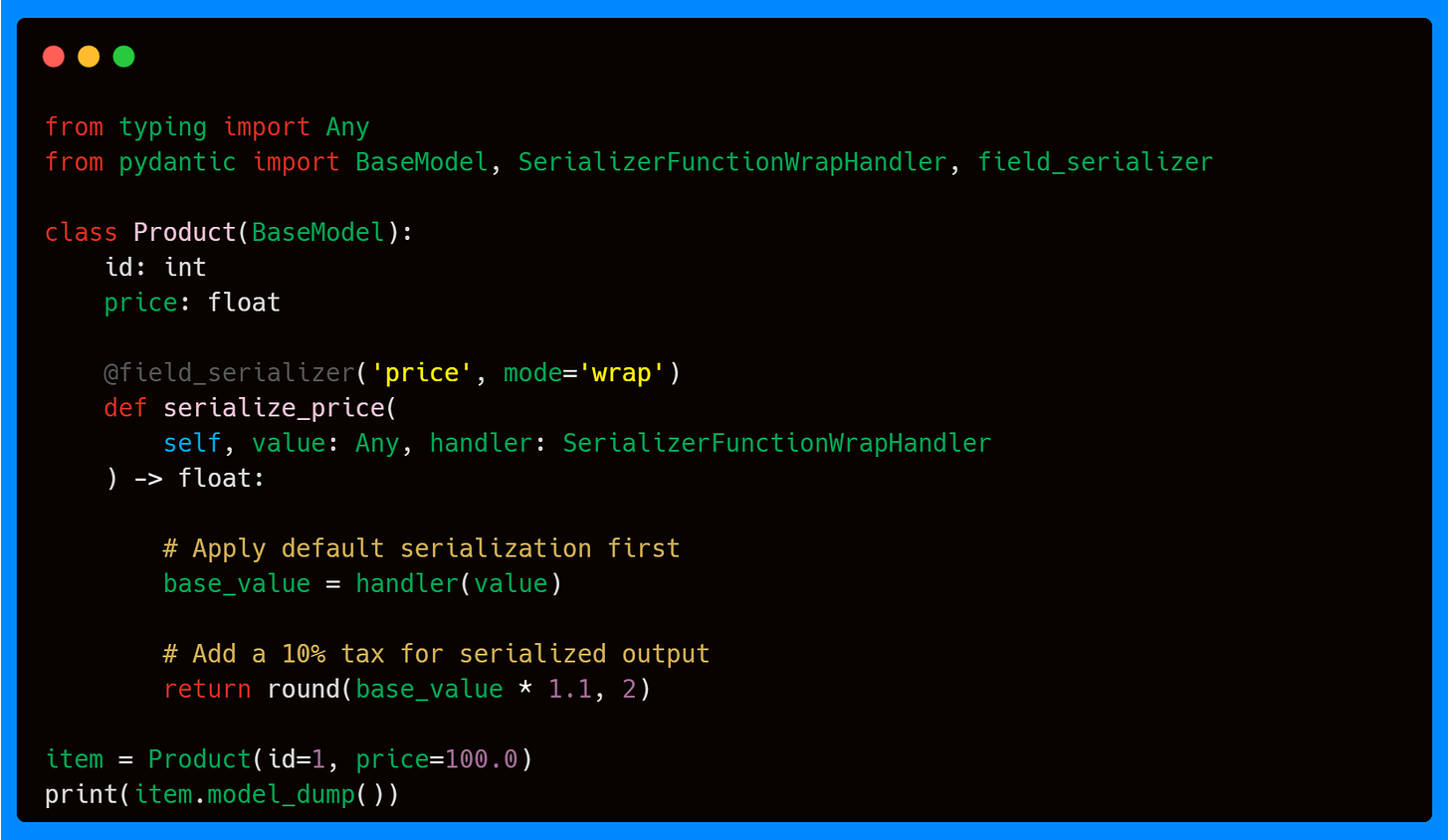

Instead of overriding serialization entirely, you may want to enhance the default logic. For instance, imagine you want to serialize a field normally, but also log or enrich it. Wrap mode makes this possible because it gives you access to Pydantic’s default handler.

Here, the wrap handler ensures that the price value is processed through Pydantic’s default serialization before applying a custom transformation (adding tax). This makes it easy to extend behavior rather than replacing it outright.
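Here is a minimal sketch of a wrap-mode field serializer, assuming Pydantic v2. The Product model and the flat TAX_RATE are hypothetical. The handler runs the default serialization first, and the custom logic then adjusts its result. The stored value is unchanged.

```python
from pydantic import BaseModel, SerializerFunctionWrapHandler, field_serializer

TAX_RATE = 0.20  # hypothetical flat tax rate for the example


class Product(BaseModel):
    name: str
    price: float

    @field_serializer("price", mode="wrap")
    def serialize_price(self, value: float, handler: SerializerFunctionWrapHandler) -> float:
        base = handler(value)                   # default serialization first
        return round(base * (1 + TAX_RATE), 2)  # then the custom transformation


product = Product(name="GPU hour", price=10.0)
print(product.model_dump())  # {'name': 'GPU hour', 'price': 12.0}
print(product.price)         # 10.0
```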

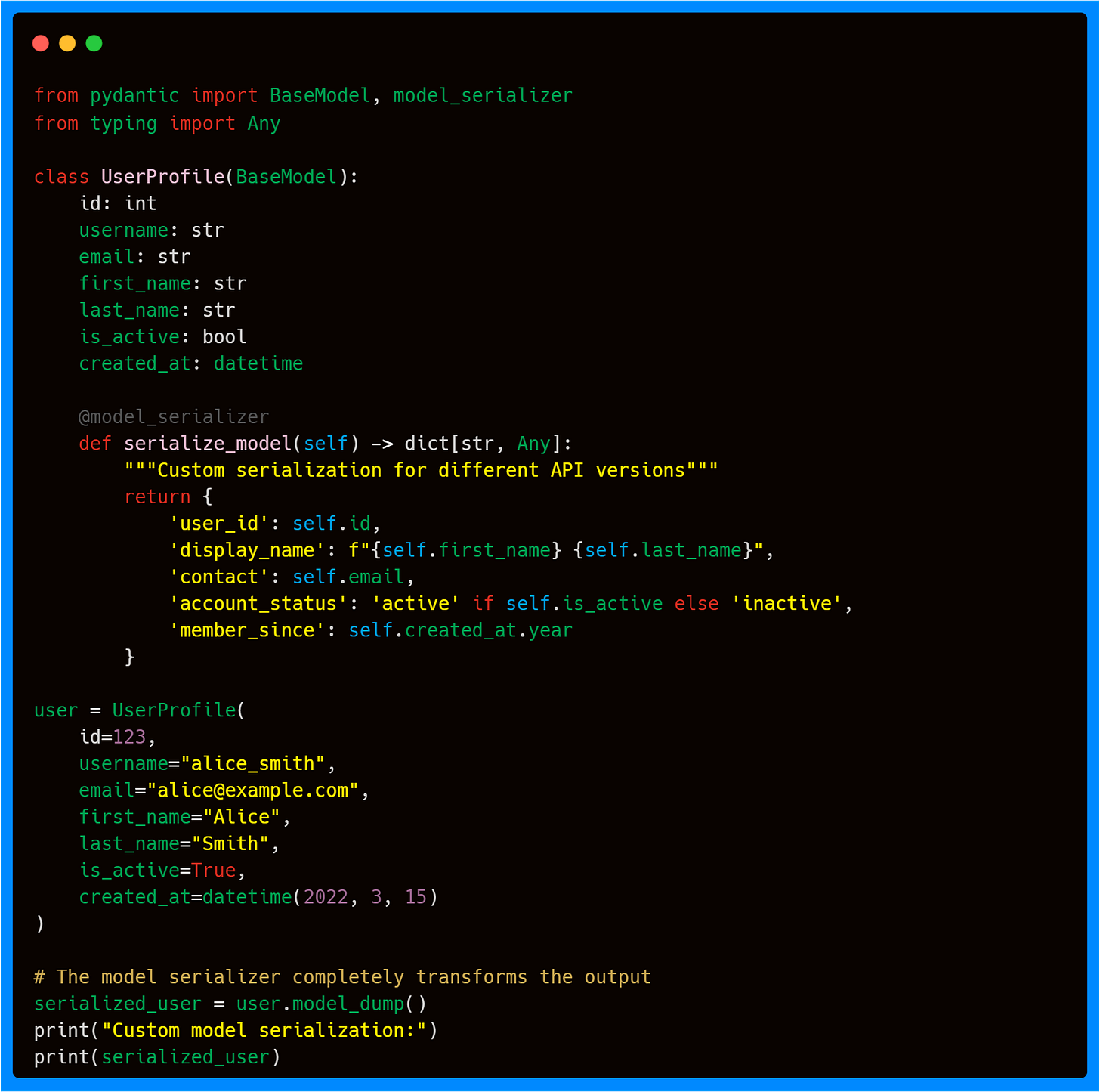

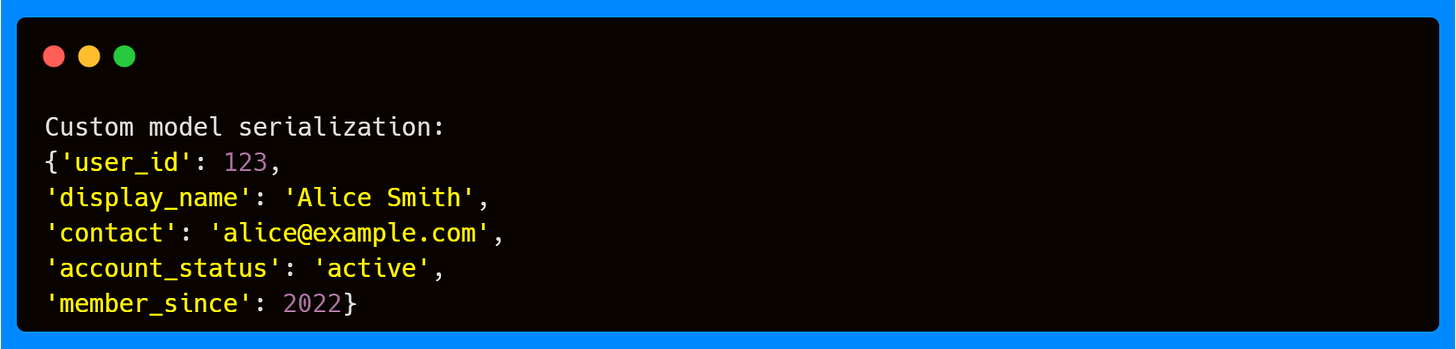

Model Serializers

While field serializers handle individual fields, model serializers let you transform the entire model during serialization. This is particularly useful for creating different "views" of your data or adapting to various API specifications.

The model serializer above completely changes the output by renaming fields, combining others, and even formatting values differently. This kind of transformation is useful for presenting consistent responses across different APIs without modifying the internal model structure.
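Here is a sketch of a plain model serializer, assuming Pydantic v2. The Customer model and the camelCase output keys are hypothetical. Fields are renamed, two are combined, and a value is reformatted, while the model's own attributes stay untouched.

```python
from pydantic import BaseModel, model_serializer


class Customer(BaseModel):
    first_name: str
    last_name: str
    balance_cents: int

    @model_serializer
    def to_public_api(self) -> dict:
        # Plain mode: this dict is the entire serialized output
        return {
            "fullName": f"{self.first_name} {self.last_name}",  # two fields combined
            "balance": f"${self.balance_cents / 100:.2f}",       # value reformatted
        }


customer = Customer(first_name="Ada", last_name="Lovelace", balance_cents=123456)
print(customer.model_dump())  # {'fullName': 'Ada Lovelace', 'balance': '$1234.56'}
```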

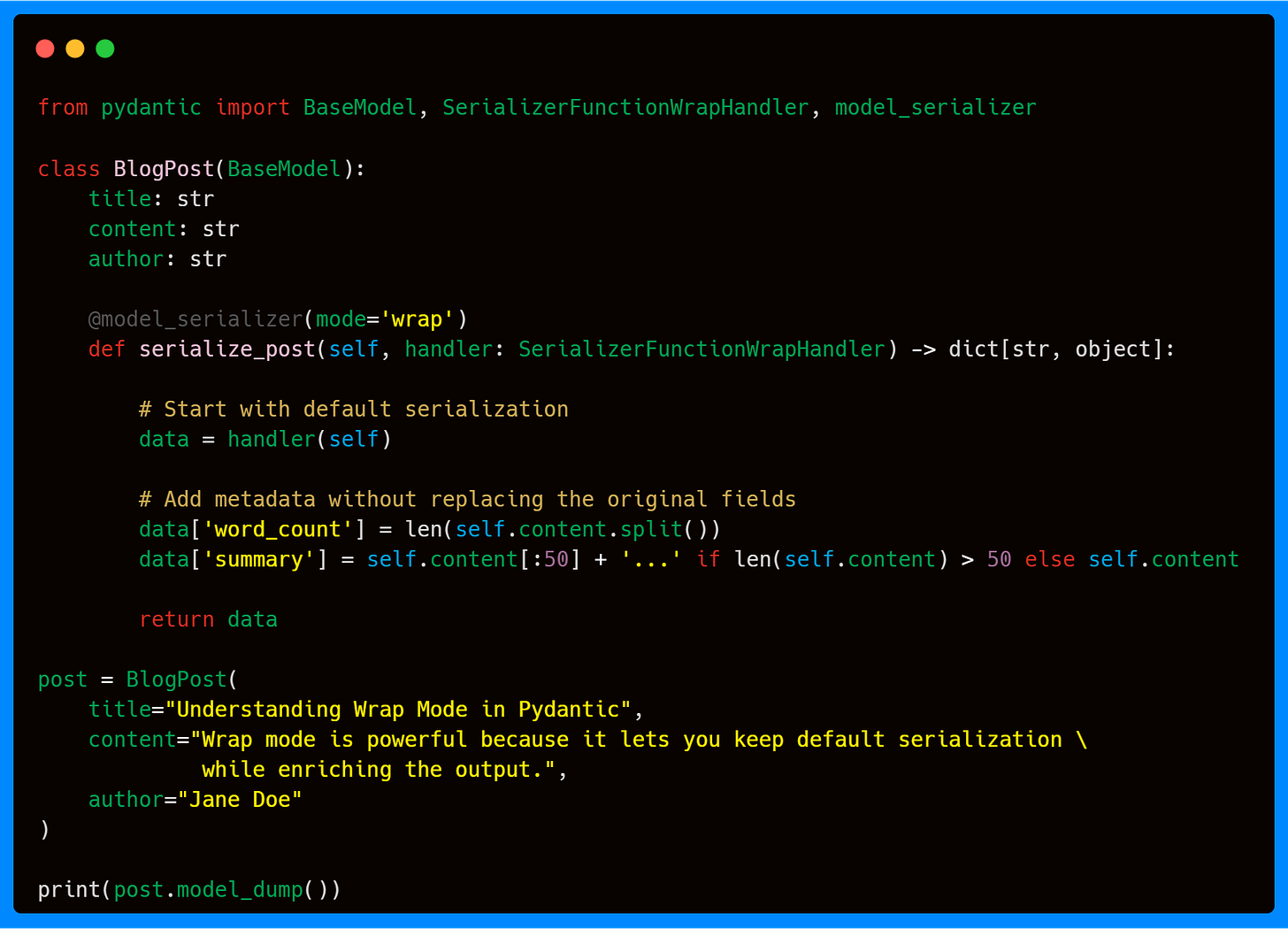

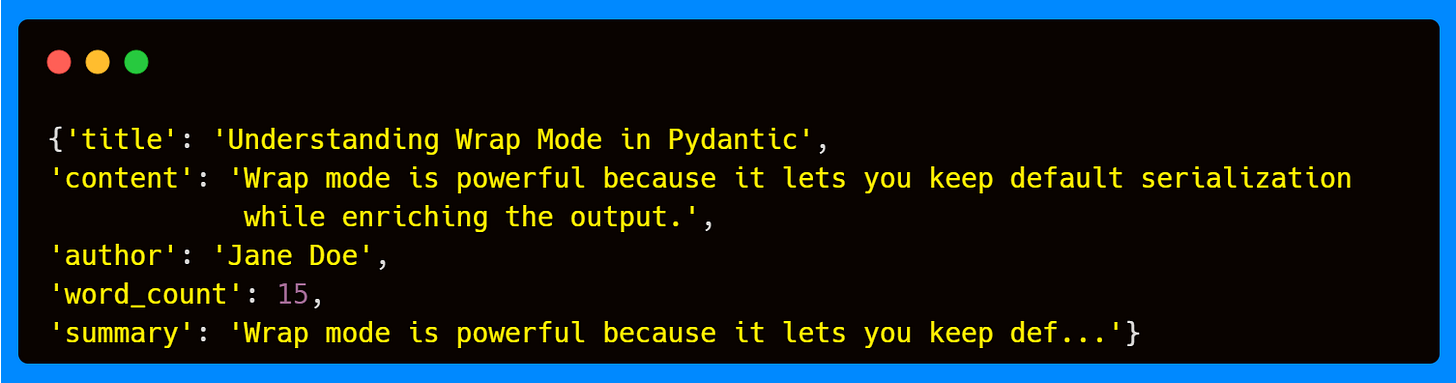

Wrap mode also works at the model level. It is ideal when you need to augment serialization while preserving the model's default behavior.

Here, the handler first delegates serialization to Pydantic, ensuring the base structure remains intact. We then extend the output with computed fields like word_count and summary. This makes wrap mode perfect for cases where you want to preserve consistency but also enrich your data with extra insights.
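Here is a minimal sketch of a wrap-mode model serializer, assuming Pydantic v2. The Document model is hypothetical, and the "summary" rule (first three words) is an arbitrary stand-in for whatever enrichment you need.

```python
from pydantic import BaseModel, SerializerFunctionWrapHandler, model_serializer


class Document(BaseModel):
    title: str
    text: str

    @model_serializer(mode="wrap")
    def add_insights(self, handler: SerializerFunctionWrapHandler) -> dict:
        data = handler(self)  # default serialization of every field
        words = self.text.split()
        data["word_count"] = len(words)
        # Hypothetical summary: the first three words of the text
        data["summary"] = " ".join(words[:3]) + ("..." if len(words) > 3 else "")
        return data


doc = Document(title="Release notes", text="Pydantic makes validation simple and fast")
print(doc.model_dump())
# {'title': 'Release notes', 'text': 'Pydantic makes validation simple and fast',
#  'word_count': 6, 'summary': 'Pydantic makes validation...'}
```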

✅ With these examples, we’ve now covered both field and model serializers in both plain and wrap modes. Plain mode gives you full control, while wrap mode lets you enhance default behavior without discarding it—giving you the best of both worlds, in the same way as the custom validators.

Finally, let’s look at how Pydantic handles situations where data can take multiple valid forms—unions.

Unions

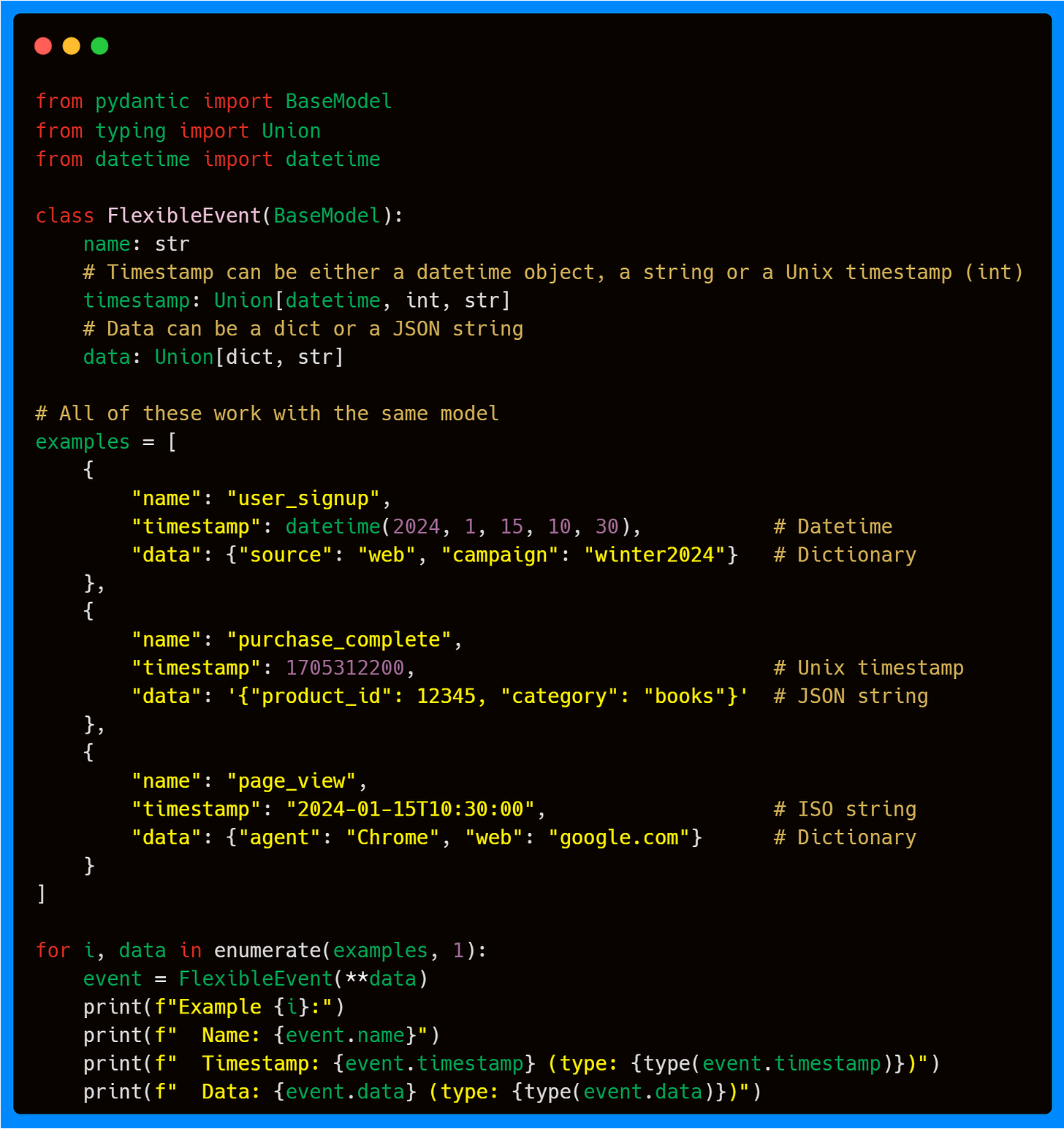

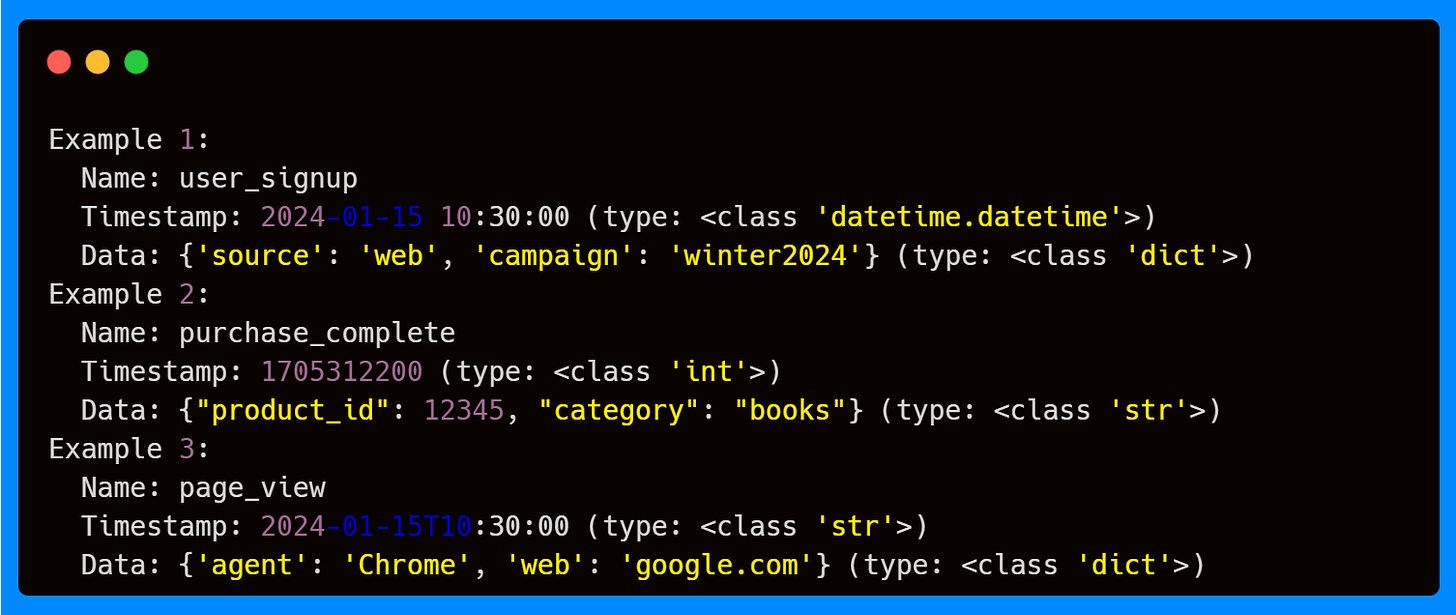

Moving from serialization to type flexibility, unions represent one of Pydantic's most powerful features for handling diverse input formats—a common challenge in ML/AI applications where data might come from multiple sources with different schemas. Unions allow a single field to accept multiple types, with Pydantic intelligently determining which type fits the input data.

Basic Union Types

Unions are particularly valuable when building systems that need to handle legacy data formats alongside new ones, or when integrating with multiple external APIs that return similar but differently structured data.

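In the default "smart" mode, Pydantic picks the best match rather than simply the first one that succeeds. An input that matches a member without any conversion wins; otherwise, Pydantic falls back to the first member that accepts the input after coercion. This makes your APIs more forgiving while still maintaining type safety.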
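Here is a minimal sketch of a union that accepts both a legacy and a newer format, assuming Pydantic v2. The LabeledSample model is hypothetical.

```python
from typing import Union

from pydantic import BaseModel


class LabeledSample(BaseModel):
    # Legacy records store a single label; newer records store a list of labels
    labels: Union[str, list[str]]
    # Scores may be missing, a single float, or one float per label
    score: Union[float, list[float], None] = None


legacy = LabeledSample(labels="cat", score=0.9)
current = LabeledSample(labels=["cat", "animal"], score=[0.9, 0.8])
unscored = LabeledSample(labels="dog")
```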

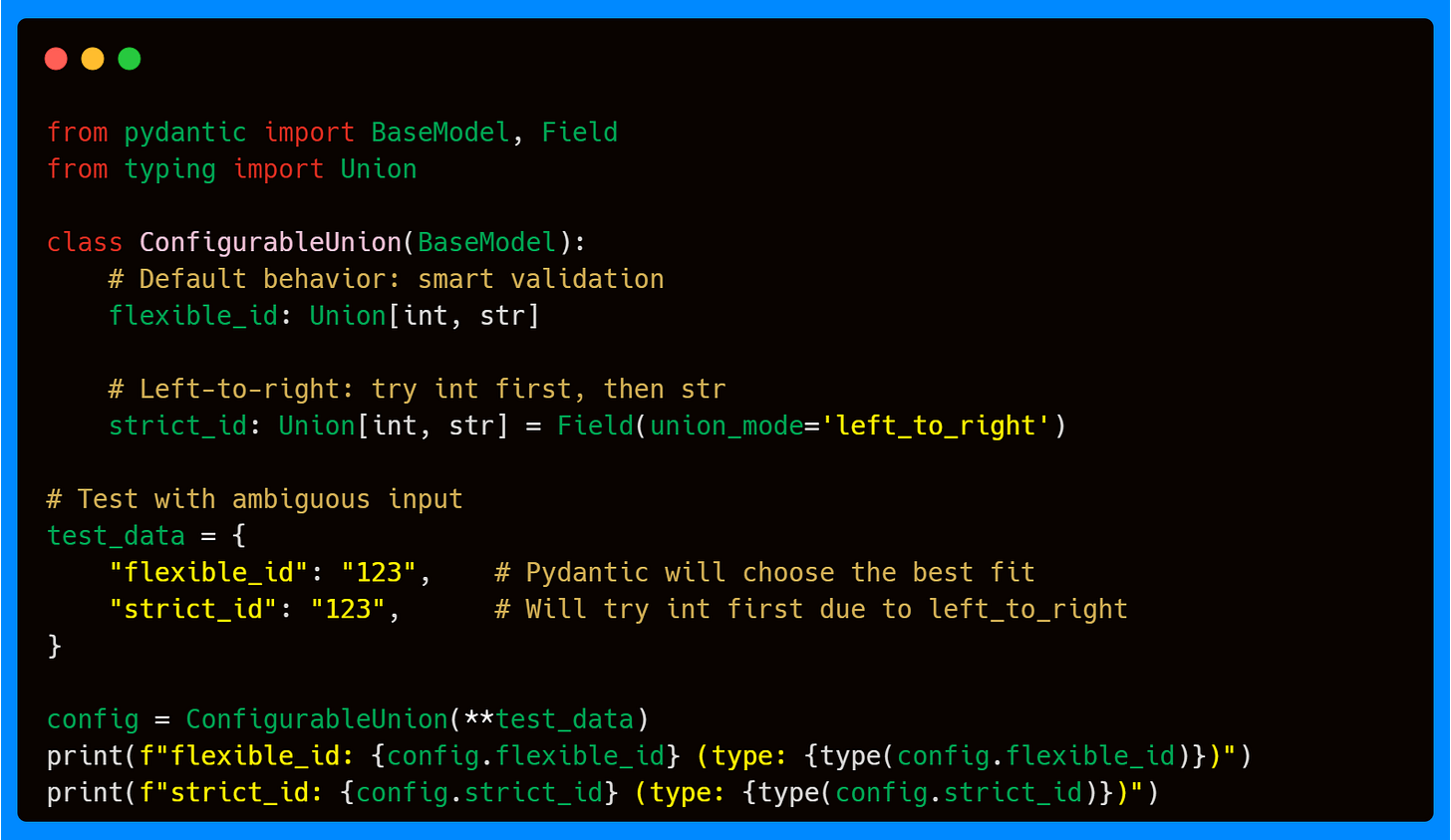

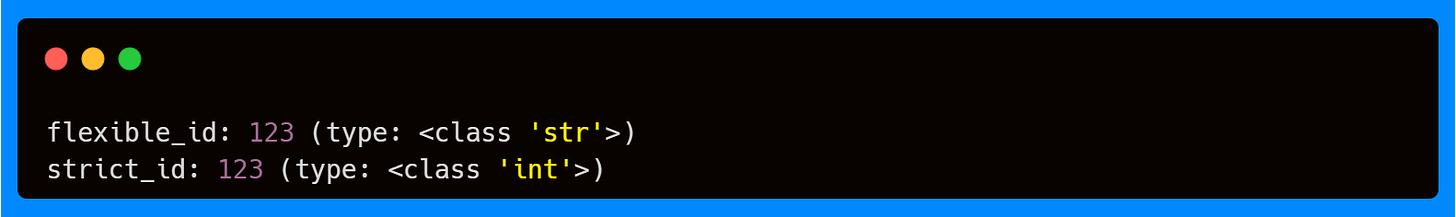

Union Mode Control

By default, Pydantic uses "smart" union validation, which chooses the best-matching member rather than the first one that succeeds. For fully predictable, order-based behavior, set union_mode="left_to_right" on Field so that members are tried in the order they are declared.

Here, the same string "123" could fit both int and str. In default smart mode, Pydantic keeps it as a str because it matches naturally without coercion. In left_to_right mode, Pydantic tries the first type (int) and succeeds by coercing the string into an integer. This gives you more control over how ambiguous data should be resolved.
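The two modes can be compared side by side in the following sketch, assuming Pydantic v2. The model names are hypothetical.

```python
from typing import Union

from pydantic import BaseModel, Field


class SmartModel(BaseModel):
    value: Union[int, str]  # default: smart mode


class LeftToRightModel(BaseModel):
    value: Union[int, str] = Field(union_mode="left_to_right")


smart_value = SmartModel(value="123").value
ordered_value = LeftToRightModel(value="123").value

print(repr(smart_value))    # '123' (exact str match wins)
print(repr(ordered_value))  # 123 (int is tried first and coerces "123")
```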

Discriminated Unions

Sometimes your data can take multiple shapes, but each shape can be identified by a specific field (a “tag”). This is where discriminated unions (also called tagged unions) come in.

In API design, this concept maps directly to OpenAPI discriminators. The discriminator is a special field that tells consumers (and tools like code generators) which schema is in use. This creates a form of data polymorphism, where the same field in your model can represent different object types depending on the discriminator value.

Note: OpenAPI is an open standard for describing RESTful APIs in a structured way, usually in JSON or YAML. It serves as a blueprint that defines endpoints, request parameters, and response formats, and tools like Swagger UI can turn it into interactive documentation. Frameworks such as FastAPI automatically generate an OpenAPI spec from your Pydantic models, so your validation rules also become part of your API contract. In the context of discriminated unions, Pydantic models can generate OpenAPI schemas with a discriminator field, making APIs both machine-readable and developer-friendly.

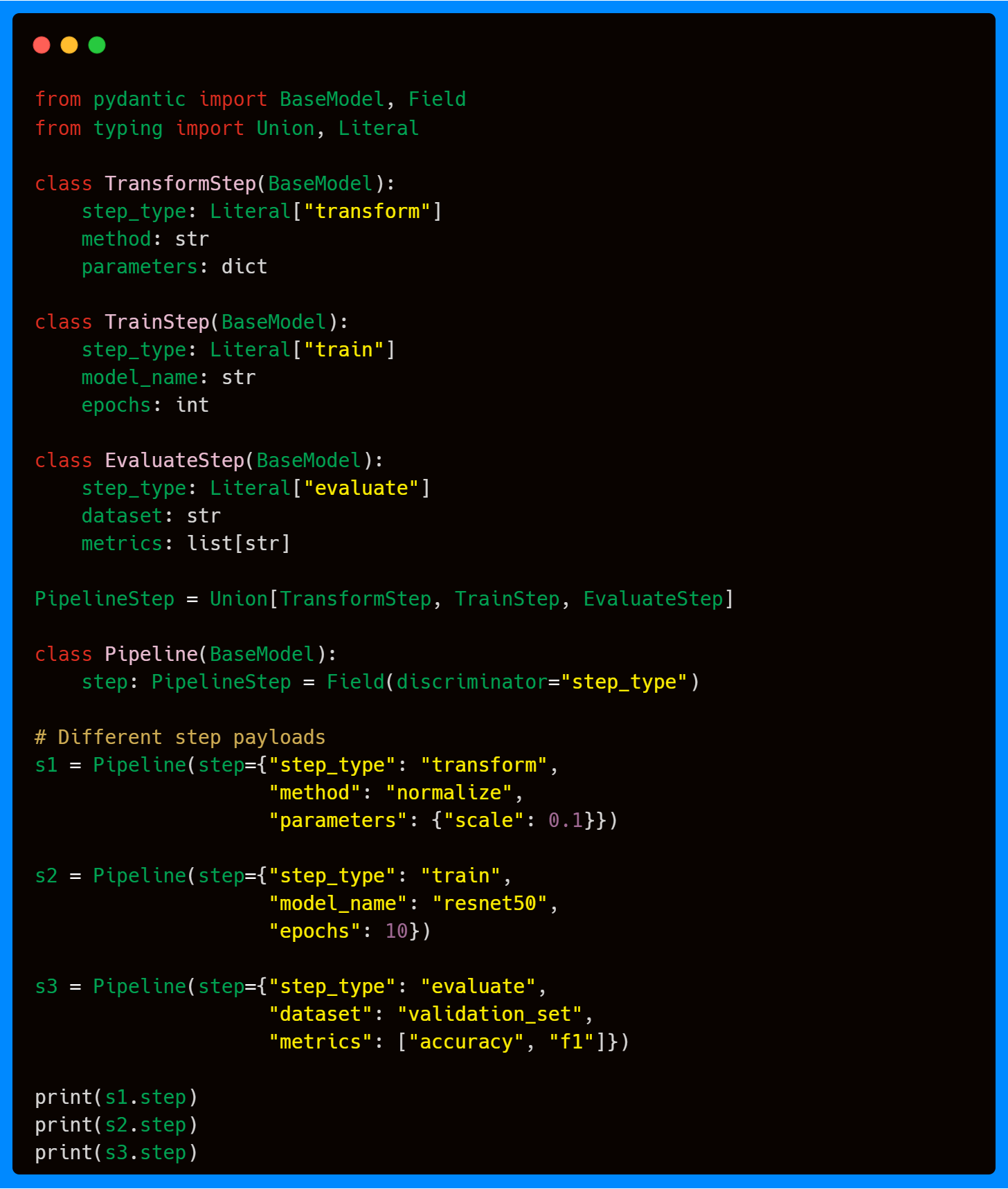

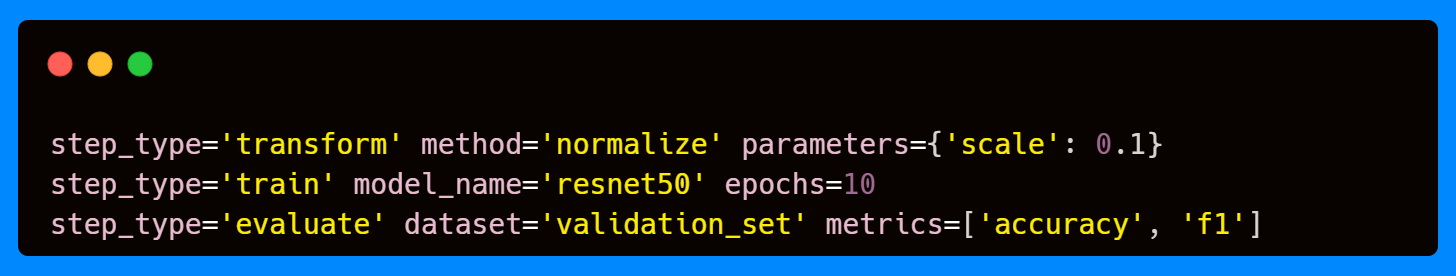

Let’s say we’re building an ML workflow system where each step can be a transform, a train, or an evaluate step.

Here, the step_type field acts as the discriminator. Depending on whether it’s "transform", "train", or "evaluate", Pydantic will instantiate the correct model. This avoids guesswork and makes validation strict while still supporting multiple shapes under one field. When possible, it’s best to rely on discriminated unions, as they provide both speed and more predictable validation results.
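Here is a sketch of the workflow example, assuming Pydantic v2. The step models, their fields, and the Workflow container are hypothetical. Each step_type value maps to exactly one model. An unknown tag is rejected directly, and the generated JSON Schema carries the discriminator that tools such as FastAPI build on for OpenAPI.

```python
import json
from typing import Annotated, Literal, Union

from pydantic import BaseModel, Field, ValidationError


class TransformStep(BaseModel):
    step_type: Literal["transform"]
    function: str


class TrainStep(BaseModel):
    step_type: Literal["train"]
    epochs: int
    learning_rate: float


class EvaluateStep(BaseModel):
    step_type: Literal["evaluate"]
    metrics: list[str]


# step_type selects which model validates each entry
Step = Annotated[
    Union[TransformStep, TrainStep, EvaluateStep],
    Field(discriminator="step_type"),
]


class Workflow(BaseModel):
    steps: list[Step]


workflow = Workflow(
    steps=[
        {"step_type": "transform", "function": "normalize"},
        {"step_type": "train", "epochs": 10, "learning_rate": 0.001},
        {"step_type": "evaluate", "metrics": ["accuracy", "f1"]},
    ]
)
print([type(step).__name__ for step in workflow.steps])
# ['TransformStep', 'TrainStep', 'EvaluateStep']

try:
    Workflow(steps=[{"step_type": "deploy"}])  # tag not in the union
except ValidationError as exc:
    error_type = exc.errors()[0]["type"]  # 'union_tag_invalid'

schema_text = json.dumps(Workflow.model_json_schema())
```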

Conclusion

Over the course of this three-part series, we’ve walked through Pydantic step by step:

In Part 1, we introduced the essentials: ConfigDict, Field, and SettingsConfigDict, which form the foundation of model configuration and type safety.

In Part 2, we dove deeper into validation with field, model, and function validators, plus data parsing methods that ensure correctness at every layer.

In this final part, we explored advanced features: custom and extra types, strict vs. lax conversions, serialization with plain and wrap modes, and union types—including discriminated unions for handling complex, polymorphic data.

Together, these three posts provide a complete picture of how Pydantic helps you build robust, predictable, and production-ready systems, especially in ML/AI contexts where data integrity is everything.

Thank you for reading and following along with this series—I hope it helps you feel more confident applying Pydantic in your own projects. 🚀

I don’t write just for myself—every post is meant to give you tools, ideas, and insights you can use right away.

🤝 Got feedback or topics you'd like me to cover? I'd love to hear from you. Your input shapes what comes next!