AI Echoes Wrap-Up 2025

A Year of Building, Learning, and Sharing Production ML/AI Systems

Looking back at 2025, it’s been quite a ride. Seventeen articles, dozens of projects, and countless hours spent wrestling with LLMs, vector databases, and cloud infrastructure. This year wasn’t about chasing trends—it was about building systems that actually work, learning from what breaks, and sharing those lessons with you.

Let me take you through the journey.

Before the Beginning: Testing the Waters

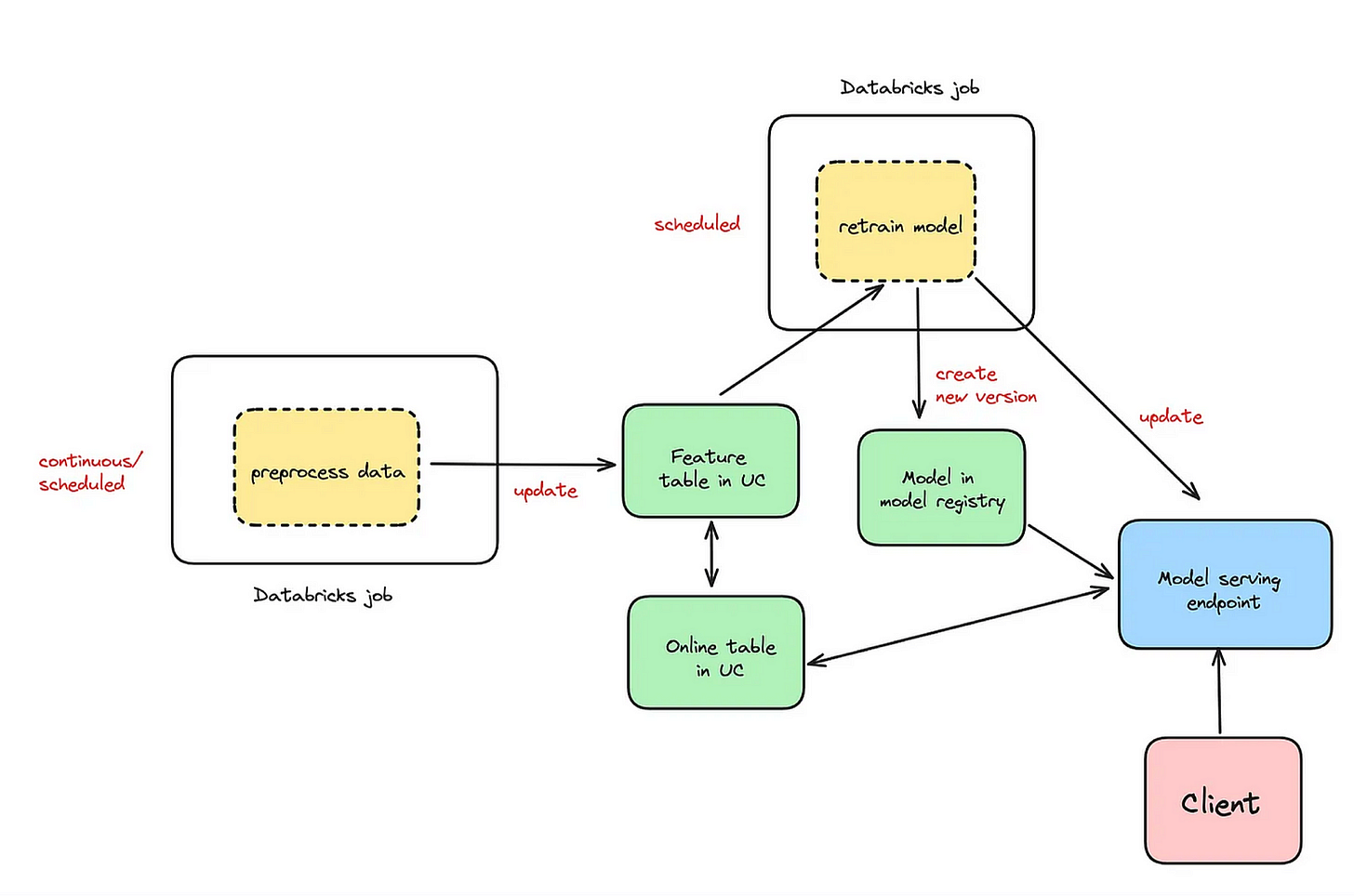

Actually, the story starts a bit earlier. In December 2024, I wrote my first guest article for the Marvelous MLOps Substack by Maria Vechtomova and Başak Tuğçe Eskili: Building an End-to-end MLOps Project with Databricks. This was a comprehensive deep dive into deploying a credit default prediction model using the full Databricks stack: Feature Store, MLflow experiment tracking, Delta Live Tables, Databricks Asset Bundles (DAB) for infrastructure-as-code, A/B testing with custom wrappers, and Lakehouse monitoring for drift detection.

At the time, I was primarily writing on Medium and hadn’t seriously considered launching a newsletter. But that piece did well. It showed me there was an appetite for detailed, end-to-end production ML content that didn’t skip the hard parts. That planted the seed.

The Beginning: Collaborations That Sparked a Newsletter

The Databricks article opened doors. A few months later, other collaborations began.

In June 2025, I wrote a guest piece for Paul Iusztin’s Decoding AI Magazine: Your AI Football Assist Eval Guide. Using Wikipedia data about football teams, I built a complete evaluation pipeline with ZenML orchestration, MongoDB storage, and Opik for deep observability. The goal? Show how to actually measure LLM quality when there’s no clear “correct” answer. This project taught me crucial lessons about moving from simple heuristic metrics (BLEU, ROUGE) through embedding-based approaches (BERTScore, cosine similarity) to sophisticated LLM-as-a-judge methods.
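To make the first two rungs of that ladder concrete, here is a minimal sketch, not the code from the article. `unigram_f1` is a simplified ROUGE-1-style overlap score, and `cosine_similarity` works on any two vectors; in a real pipeline those vectors would come from an embedding model, which is assumed here and not shown. The example sentences are made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and keep only alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def unigram_f1(candidate: str, reference: str) -> float:
    """ROUGE-1-style F1: harmonic mean of unigram precision and recall."""
    cand, ref = Counter(tokenize(candidate)), Counter(tokenize(reference))
    overlap = sum((cand & ref).values())  # clipped count of shared tokens
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# A paraphrase shares meaning with the reference but few exact words,
# so the overlap score stays low even though the answer is reasonable.
reference = "The club won the league title"
candidate = "The team lifted the championship trophy"
overlap_score = unigram_f1(candidate, reference)
```

The low overlap score on a valid paraphrase is the usual argument for moving on to embedding-based or judge-based metrics when no single reference answer exists.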

Two months later, in August 2025, a second collaboration with Decoding AI Magazine produced my most ambitious infrastructure piece: The GitHub Issue AI Butler on Kubernetes. It is a complete production AI stack with a LangGraph multi-agent workflow, Kubernetes on AWS EKS, Qdrant vector search, PostgreSQL on RDS, Guardrails AI for safety, and full infrastructure-as-code with AWS CDK. This was my deep dive into cloud-native agentic systems.

The response to these collaborations, combined with the technical articles I’d been writing on Medium, Datacamp, Zilliz, CircleCI, AWS, and Qdrant, made something clear: there was an audience for production-focused ML/AI content that showed the full picture. Not just the happy path, but the failed deploys, the infrastructure decisions, and the lessons learned from building systems that actually have to work.

So in August 2025, I launched AI Echoes with a simple promise: production-focused ML/AI content with working code, real deployments, and lessons from systems that had to survive in the wild.

Going Deep on Core Tools

The Pydantic Trilogy

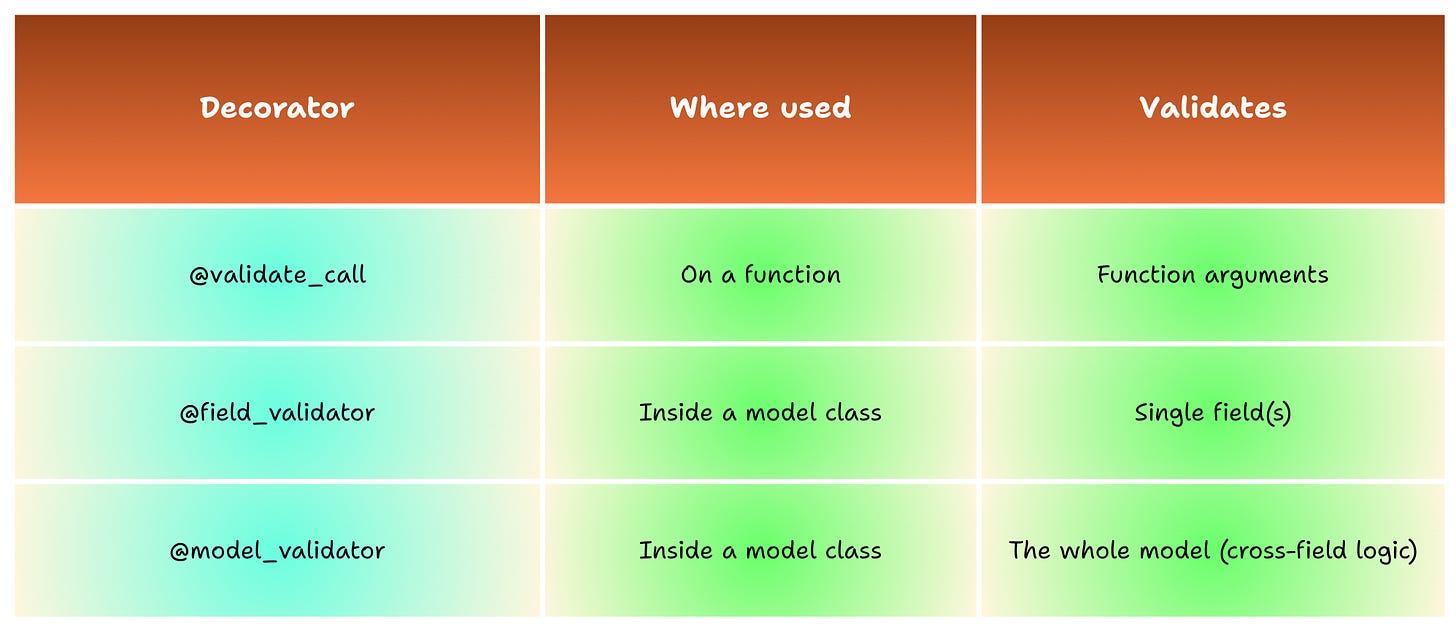

Three articles on Pydantic might seem excessive, but after building systems that rely on it daily, I realized how much depth this library deserves.

Part 1: Foundation and Core Concepts covered ConfigDict, Field definitions, and SettingsConfigDict, the building blocks that make ML pipelines robust. These aren’t just validation tools; they’re insurance against the messy reality of production data.

Part 2: Advanced Validation Techniques dove into field validators, model validators, and function validators. Validation isn’t just about catching errors; it’s about transforming chaos into structure before problems propagate downstream.

Part 3: Types, Unions, and Serialization explored custom types, network types, and the serialization patterns that make data transformation seamless. By this point, Pydantic had become less of a library and more of a mindset for thinking about data quality.
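For readers who haven’t used these pieces together, here is a small sketch assuming Pydantic v2. The `TrainingConfig` model and its fields are hypothetical and are not taken from the articles. It combines `ConfigDict`, `Field` constraints, a field validator that transforms its input, and a model validator that checks fields against each other. `SettingsConfigDict` lives in the separate pydantic-settings package and is left out.

```python
from pydantic import (
    BaseModel,
    ConfigDict,
    Field,
    ValidationError,
    field_validator,
    model_validator,
)


class TrainingConfig(BaseModel):
    """Hypothetical config model, used only for illustration."""

    # Reject unknown keys and strip surrounding whitespace from strings.
    model_config = ConfigDict(extra="forbid", str_strip_whitespace=True)

    run_name: str = Field(min_length=1)
    learning_rate: float = Field(gt=0, lt=1)
    train_fraction: float = Field(default=0.8, gt=0, lt=1)
    val_fraction: float = Field(default=0.2, gt=0, lt=1)

    @field_validator("run_name")
    @classmethod
    def normalize_run_name(cls, value: str) -> str:
        # Transform, not just check: store a lowercase, dash-separated name.
        return value.lower().replace(" ", "-")

    @model_validator(mode="after")
    def fractions_sum_to_one(self) -> "TrainingConfig":
        # Cross-field rule that no single Field constraint can express.
        if abs(self.train_fraction + self.val_fraction - 1.0) > 1e-9:
            raise ValueError("train_fraction and val_fraction must sum to 1")
        return self


config = TrainingConfig(run_name="  Baseline Run ", learning_rate=0.001)

try:
    TrainingConfig(run_name="bad-split", learning_rate=0.001, train_fraction=0.9)
except ValidationError as exc:
    split_error = exc  # the model validator's ValueError surfaces as a ValidationError
```

The field validator runs after the built-in string handling, so the stored name is already stripped before it is lowercased.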

Building Production Systems

RAG That Actually Works

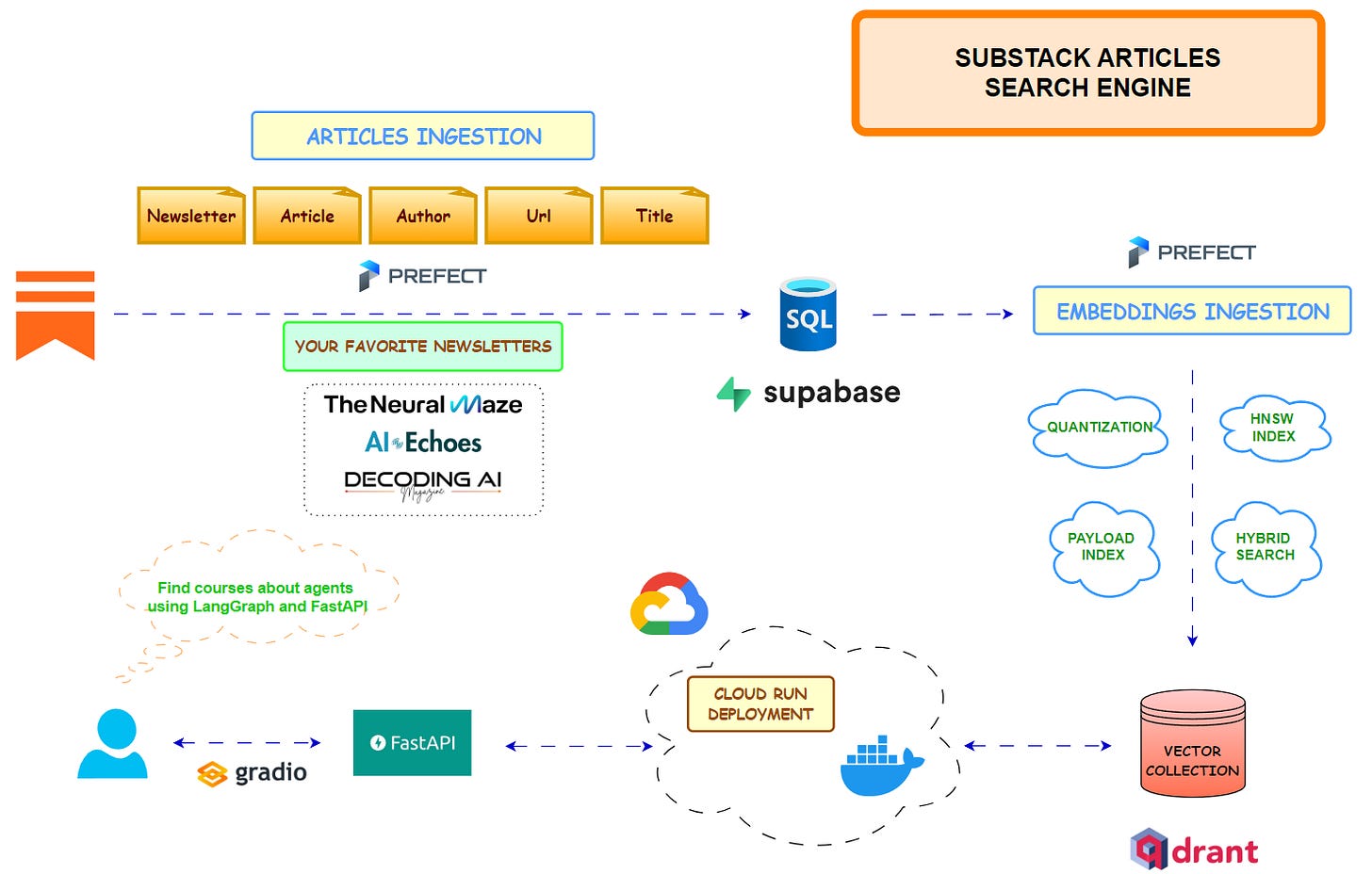

The Substack Articles Search Engine course became a five-part deep dive into building production-ready RAG systems. This was my first course, built in collaboration with Miguel Otero Pedrido from The Neural Maze, and I plan to create more in 2026.

Lesson 1 tackled RSS ingestion with paywall detection, batch processing, and structured storage in Supabase. The challenge wasn’t just parsing feeds; it was handling real-world messiness: missing fields, malformed XML, and content that pretends to be articles but isn’t.

Lesson 2 added semantic search with Qdrant’s hybrid dense-sparse embeddings. This is where RAG gets interesting: configuring HNSW indexes, setting up quantization, enabling payload indexes, and making intelligent trade-offs between speed and quality.
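Hybrid search has to merge a dense (semantic) ranking with a sparse (keyword) ranking at some point. One common way to do that is reciprocal rank fusion, sketched below in plain Python. This is an illustration of the idea only: whether the course uses this fusion method is an assumption, the document IDs are made up, and in practice the vector database would typically do the fusion for you.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse ranked ID lists; each appearance contributes 1 / (k + rank)."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so the output is deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


dense_hits = ["doc_b", "doc_a", "doc_c"]   # hypothetical semantic ranking
sparse_hits = ["doc_a", "doc_d", "doc_b"]  # hypothetical keyword ranking
fused = reciprocal_rank_fusion([dense_hits, sparse_hits])
fused_ids = [doc_id for doc_id, _ in fused]
```

Documents that rank well in both lists rise to the top, while a document found by only one retriever still gets a chance to appear.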

Lesson 3 introduced the FastAPI backend with multi-provider LLM support (OpenRouter, OpenAI, HuggingFace) and both streaming and non-streaming responses. The architecture separated concerns cleanly: routes handle HTTP, services manage business logic, and providers abstract LLM implementations.

Lesson 4 deployed everything to Google Cloud Run with a Gradio UI. The system runs almost entirely on free tiers, proving you don’t need enterprise budgets to build serious AI applications.

Video Walkthrough: Miguel Otero Pedrido made a 1-hour walkthrough of the application. You’ll see how all the pieces fit together and get a sense of the system’s performance in action.

The course also spawned a React frontend because sometimes you want complete control over the user experience. Same backend, different interface—that’s what clean architecture enables.
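The route, service, and provider split from Lesson 3 can be sketched without any web framework. Everything below is a hypothetical stand-in rather than the course’s code: `EchoProvider` replaces a real LLM client, and `handle_ask` plays the role a FastAPI route would play.

```python
from dataclasses import dataclass
from typing import Protocol


class LLMProvider(Protocol):
    """Provider layer: anything that can turn a prompt into text."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoProvider:
    """Hypothetical provider that echoes the prompt instead of calling an LLM."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class SearchService:
    """Service layer: builds the prompt and knows nothing about HTTP."""

    def __init__(self, provider: LLMProvider) -> None:
        self._provider = provider

    def answer(self, question: str, context: list[str]) -> str:
        prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
        return self._provider.complete(prompt)


def handle_ask(payload: dict, service: SearchService) -> dict:
    """Route layer: checks the request shape and maps the result to a response."""
    question = str(payload.get("question", "")).strip()
    if not question:
        return {"status": 422, "error": "question is required"}
    return {"status": 200, "answer": service.answer(question, payload.get("context", []))}


service = SearchService(EchoProvider("stub"))
response = handle_ask({"question": "What is HNSW?"}, service)
```

Because the service only depends on the `LLMProvider` protocol, swapping providers or putting a different interface in front of it does not touch the business logic.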

Biomedical GraphRAG: The Breakthrough

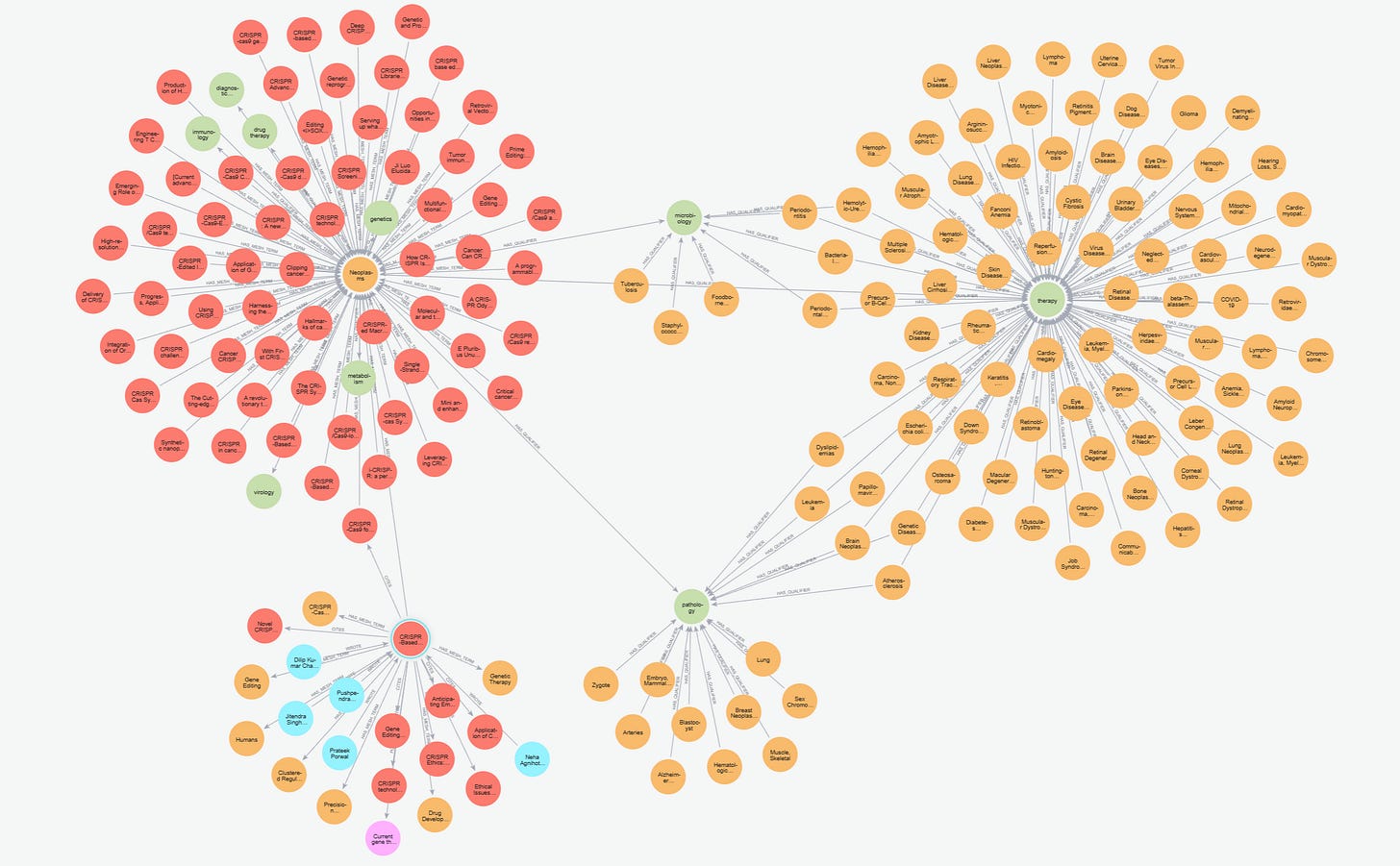

The Biomedical GraphRAG project became something I didn’t expect: viral.

Over 1,600 likes on LinkedIn. 130,000 impressions. My follower count doubled. Apparently, people had been waiting for someone to show how to actually combine knowledge graphs with vector search and tool calling, not just talk about it theoretically.

Here’s why it connected with people.

Vector search is great at finding what papers say, but terrible at understanding how things connect. You can find papers about CRISPR, but you can’t ask “Which collaborators of Alyna Katti have co-authored papers on CRISPR-Cas Systems and Neoplasms?” Vector databases don’t track relationships; they track similarity.

The solution?

Fusion architecture. Neo4j handles the structured knowledge graph: authors, papers, collaborations, citations, and research areas. Qdrant handles semantic search over paper abstracts. An LLM-powered tool-calling layer decides which system to query based on the question type.

When you ask that collaborator question, the system doesn’t do a semantic search. It traverses the graph: find Alyna Katti → get her collaborators → filter by papers tagged with both CRISPR-Cas Systems and Neoplasms → return names with paper counts. That’s structured intelligence, not similarity matching.
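The traversal steps can be sketched over a tiny in-memory graph. The papers and the "Author A/B/C" names below are invented placeholders standing in for the Neo4j data, and the sketch assumes the question counts any qualifying paper by a collaborator, not only papers co-written with the starting author. The real project would run a graph query instead.

```python
from collections import Counter

# Hypothetical toy data: each paper lists its authors and topic tags.
PAPERS = {
    "paper-1": {"authors": {"Alyna Katti", "Author A"}, "topics": {"CRISPR-Cas Systems", "Neoplasms"}},
    "paper-2": {"authors": {"Alyna Katti", "Author B"}, "topics": {"CRISPR-Cas Systems"}},
    "paper-3": {"authors": {"Author A", "Author C"}, "topics": {"CRISPR-Cas Systems", "Neoplasms"}},
    "paper-4": {"authors": {"Author B", "Author C"}, "topics": {"Neoplasms"}},
}


def collaborators_on_topics(author: str, topics: set[str]) -> list[tuple[str, int]]:
    """Return (collaborator, paper count) for papers tagged with every topic."""
    # Step 1: collaborators are co-authors on any paper with `author`.
    collaborators: set[str] = set()
    for paper in PAPERS.values():
        if author in paper["authors"]:
            collaborators |= paper["authors"] - {author}

    # Step 2: count each collaborator's papers that carry all requested topics.
    counts: Counter = Counter()
    for paper in PAPERS.values():
        if topics <= paper["topics"]:
            for name in paper["authors"] & collaborators:
                counts[name] += 1

    # Step 3: names with paper counts, most papers first.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


result = collaborators_on_topics("Alyna Katti", {"CRISPR-Cas Systems", "Neoplasms"})
```

No similarity score appears anywhere in this logic. The answer comes entirely from following edges and filtering on tags, which is why a vector index alone cannot produce it.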

The response validated something I’d been thinking about: the ML/AI community is hungry for production patterns that actually work at scale. Not toy examples, not “hello world” demos—real systems handling real data with real complexity.

It also taught me about technical writing reach. Some articles get a few hundred views. Some touch a nerve and spread. The difference isn’t always content quality—sometimes it’s timing, sometimes it’s solving a problem lots of people have, sometimes it’s just showing something people thought was harder than it actually is.

Agentic Systems and Multi-Agent Orchestration

The Conversational Audio Agents project combined LangGraph’s checkpoint system for state persistence with structured outputs for reliable parsing. Two agents, a researcher and a validator, collaborate to generate and assess content quality, with distinct voices for audio playback.

The architecture demonstrates clean separation: agents focus on their jobs, LangGraph handles orchestration, and checkpoint persistence means conversations survive server restarts. Ask about LangGraph, close everything, come back three days later with a follow-up—the system picks up exactly where you left off.
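The idea behind checkpoint persistence is simple: key the conversation state by a thread ID and write it somewhere that outlives the process. The sketch below uses only the standard library and is not LangGraph’s checkpointer API. `SQLiteCheckpointer`, the table layout, and the thread ID are all hypothetical.

```python
import json
import os
import sqlite3
import tempfile


class SQLiteCheckpointer:
    """Stores the latest conversation state per thread ID in a SQLite file."""

    def __init__(self, path: str) -> None:
        self._conn = sqlite3.connect(path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS checkpoints "
            "(thread_id TEXT PRIMARY KEY, state TEXT NOT NULL)"
        )
        self._conn.commit()

    def save(self, thread_id: str, state: dict) -> None:
        # Overwrite any earlier checkpoint for the same thread.
        self._conn.execute(
            "INSERT OR REPLACE INTO checkpoints (thread_id, state) VALUES (?, ?)",
            (thread_id, json.dumps(state)),
        )
        self._conn.commit()

    def load(self, thread_id: str) -> dict:
        row = self._conn.execute(
            "SELECT state FROM checkpoints WHERE thread_id = ?", (thread_id,)
        ).fetchone()
        # Unknown threads start with an empty conversation.
        return json.loads(row[0]) if row else {"messages": []}

    def close(self) -> None:
        self._conn.close()


db_path = os.path.join(tempfile.mkdtemp(), "checkpoints.db")

first = SQLiteCheckpointer(db_path)
state = first.load("thread-1")
state["messages"].append({"role": "user", "content": "What is LangGraph?"})
first.save("thread-1", state)
first.close()  # stands in for a server restart

second = SQLiteCheckpointer(db_path)
restored = second.load("thread-1")
```

The second instance knows nothing about the first, yet it sees the earlier message, which is the behavior the follow-up-three-days-later scenario relies on.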

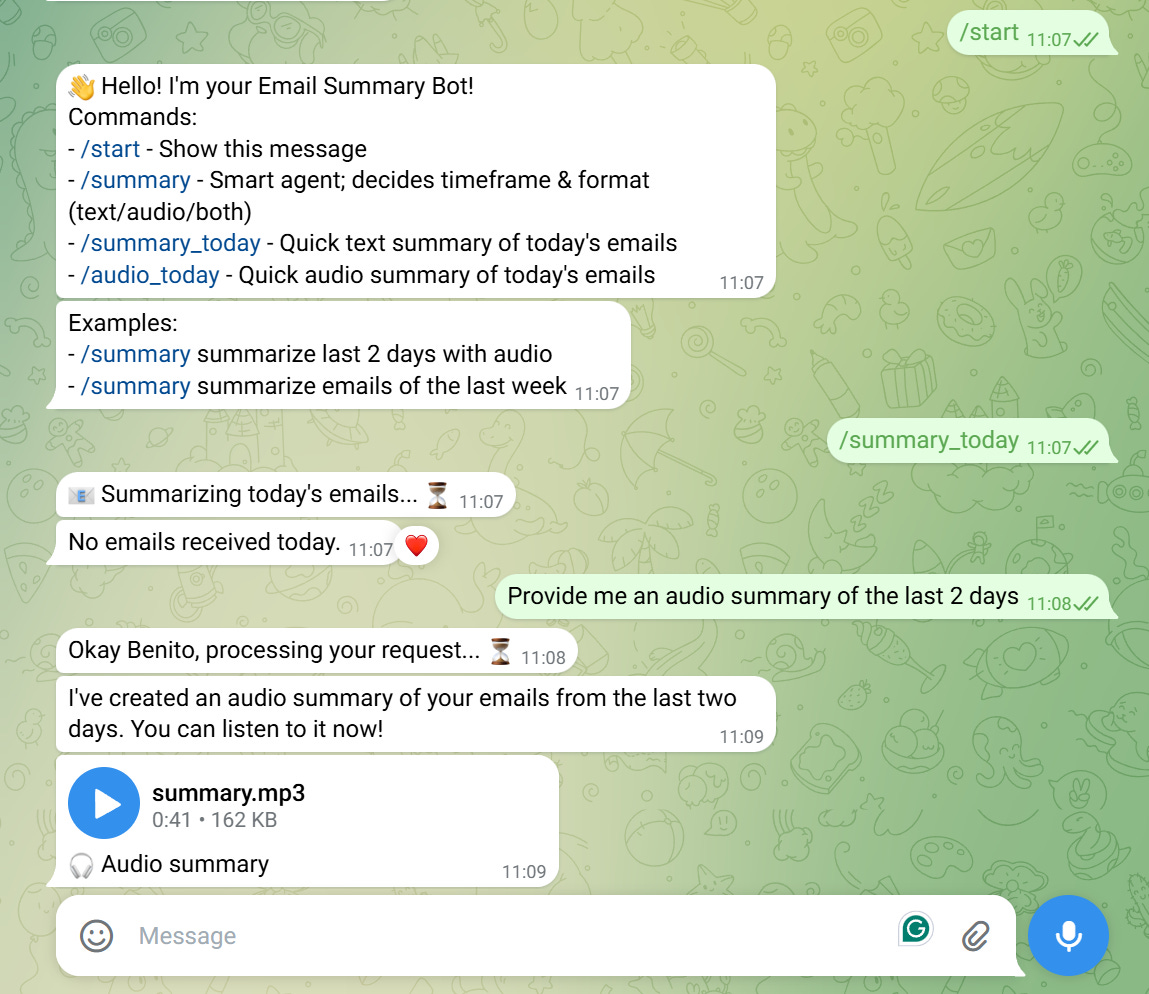

MCP Gmail Bot on Telegram took this further with Anthropic’s Model Context Protocol. The system separates email logic (fetching, parsing, TTS) from orchestration (LLM deciding what to do) from interface (Telegram bot). Change your mind about the UI? Swap it out. The email logic stays untouched.

Tooling

The Prek vs Pre-commit benchmarks showed dramatic performance improvements: 1.76× faster installation and nearly 7× faster execution. For something that runs on every commit, these savings compound into real productivity gains.

But speed isn’t the only win: crossing the psychological threshold where hooks feel instantaneous means developers stop noticing them. That reduces context switching and removes the temptation to skip checks.

OpenRouter emerged as the unified API platform that solves model fragmentation. One integration, 300+ models, minimal friction.

More than 50 models are free, though rate-limited to 50 requests per day. For experimentation, that’s more than enough. When you need quality, switch to a paid model with one parameter change. No code rewrite, no new API keys, just a different model selection.

The platform’s model routing and provider routing features add sophisticated optimization: automatic fallback when primary models hit limits, intelligent routing based on latency or cost, and provider selection that respects data collection preferences.
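Both ideas, switching models through a single parameter and falling back when a model is unavailable, can be sketched on the client side. This is not OpenRouter’s own routing feature. The model IDs are placeholders, `send` is an injected function standing in for the HTTP call, and `ModelUnavailable` is a hypothetical exception that transport would raise.

```python
from typing import Callable


class ModelUnavailable(Exception):
    """Hypothetical error raised by the transport when a model is rate-limited or down."""


def build_payload(model: str, prompt: str) -> dict:
    """Chat-completions style request body; only `model` changes between calls."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


def complete_with_fallback(
    prompt: str,
    models: list[str],
    send: Callable[[dict], str],
) -> tuple[str, str]:
    """Try each model in order; return (model, reply) from the first that succeeds."""
    errors = []
    for model in models:
        try:
            return model, send(build_payload(model, prompt))
        except ModelUnavailable as exc:
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


def fake_send(payload: dict) -> str:
    """Stand-in transport: the free model is always rate-limited."""
    if payload["model"] == "example/free-model":
        raise ModelUnavailable("rate limited")
    return "ok from " + payload["model"]


used_model, reply = complete_with_fallback(
    "Summarize this article.",
    ["example/free-model", "example/paid-model"],
    fake_send,
)
```

Moving from a free model to a paid one means changing one string in the `models` list. The payload shape and the calling code stay the same.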

The Copier Template system solved a problem that plagues every developer: keeping project boilerplate consistent as practices evolve.

Templates aren’t scaffolding you walk away from; they’re living contracts between your current best practices and existing projects. When the template evolves, projects can evolve with it. Running copier update in a project pulls the template’s latest improvements into that repository.

I discovered prek (a Rust-based pre-commit alternative) and wanted it in all my projects. Template change, git commit, tag release, run update commands. Ten minutes later, all projects had the new tooling. That’s the workflow shift that matters.
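The "run update commands" step is easy to script when several repositories share a template. The helper below is a hypothetical sketch: it runs the plain `copier update` command in each project directory and defaults to a dry run that only reports what it would do. Copier may prompt for answers or stop on conflicts, so a fully unattended run could need extra options that are not assumed here.

```python
import subprocess
from pathlib import Path


def update_projects(project_dirs: list[Path], dry_run: bool = True) -> list[tuple[Path, list[str]]]:
    """Run `copier update` in each project, or just list what would run."""
    planned = []
    for project in project_dirs:
        command = ["copier", "update"]
        planned.append((project, command))
        if not dry_run:
            # check=True stops at the first project whose update fails.
            subprocess.run(command, cwd=project, check=True)
    return planned


# Hypothetical repository paths; nothing is executed in dry-run mode.
plan = update_projects([Path("repo-a"), Path("repo-b")])
```

Reviewing the dry-run plan first is a cheap safeguard before letting the script touch every repository.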

Later in the Year: One More Collaboration

After launching AI Echoes and building momentum with my own articles, I had one more collaboration opportunity.

In November 2025, I wrote for Pau Labarta Bajo’s Real-World Machine Learning newsletter: Smarter Food Classification with Liquid AI Vision Models. This piece explored fine-tuning LiquidAI’s vision models for food classification. The 450M model jumped from 85.6% to 94.0% accuracy with LoRA training, a gain of 8.4 percentage points. The project demonstrated that smaller fine-tuned models can compete with larger general-purpose ones when you get the data preparation and training strategy right.

These collaborations—from the June football evaluation piece through the October Substack Course to the November vision fine-tuning—pushed me to write for different audiences and explore corners of ML/AI I might not have tackled in my own newsletter.

Looking Forward

The patterns from these projects share common themes:

Clean separation of concerns: Whether it’s MCP layers, LangGraph agent boundaries, or FastAPI route-service-provider architecture, systems that separate responsibilities survive changes better than tightly coupled ones.

Observability from day one: Evaluation isn’t a post-launch addition. The football content pipeline embedded metrics throughout: ZenML for orchestration visibility, Opik for LLM observability, and structured logging everywhere.

Optimize where it matters: Qdrant configuration, vector quantization, semantic caching, hybrid search. These aren’t premature optimization; they come from understanding your performance bottlenecks and addressing them systematically.

Build for iteration: Copier templates, multi-model abstractions, modular pipelines. Systems built for change adapt faster than systems built for a single use case.

What’s Next?

2026 will push these patterns further. Agentic systems need better evaluation beyond LLM-as-a-judge. RAG pipelines need smarter context management as conversations grow longer. Multi-modal systems need better tools for handling images, video, and structured data simultaneously.

But the foundation is solid. Clean architectures, observable systems, intelligent optimization, and tools built for iteration—these patterns work regardless of which new model drops next month.

Thank You

To everyone who read these articles, cloned the repositories, asked questions on LinkedIn, or sent feedback—thank you. Your engagement shapes what comes next.

The code stays open source, the tutorials stay public, and the experiments continue. That’s the commitment I made in August, and that’s what 2026 will bring.

More next year,

Benito

I don’t write just for myself—every post is meant to give you tools, ideas, and insights you can use right away.

🤝 Got feedback or topics you’d like me to cover? I’d love to hear from you. Your input shapes what comes next!
